Introduction: The Convergence of AI and Semiconductors

Artificial intelligence (AI) is no longer a niche technology; it is a driving force reshaping industries, economies, and global technological leadership. From autonomous vehicles to large language models and generative AI, the demand for high-performance computing has skyrocketed. This surge has intensified competition within the semiconductor industry, a sector that supplies the critical hardware underpinning modern AI workloads.

The relationship between AI and semiconductors is mutually reinforcing. On one hand, AI algorithms require unprecedented computing power, driving innovation in chips, accelerators, and memory systems. On the other hand, semiconductor companies are racing to design hardware optimized for AI workloads, creating a high-stakes market where performance, efficiency, and scalability determine success.

This article explores the current state of AI-driven semiconductor competition, the key technologies shaping the market, leading players and their strategies, geopolitical implications, and future trends.

1. The AI Boom and Its Impact on Semiconductor Demand

1.1 Exponential Growth of AI Workloads

The AI revolution has driven an exponential increase in computational demand. Training deep neural networks, serving real-time inference, and processing multimodal data all require high-performance processors capable of massive parallel computation.

For example, large-scale transformer models such as GPT or PaLM require thousands of accelerators to train (PaLM itself was trained on Google TPU v4 chips rather than GPUs) and enormous aggregate memory bandwidth, far more than traditional CPU-centric architectures can deliver efficiently. This growing need has accelerated the development of specialized hardware, including:

- GPUs (Graphics Processing Units) – Initially designed for rendering graphics, now adapted for AI training and inference.

- TPUs (Tensor Processing Units) – Custom accelerators developed by Google for efficient tensor operations.

- FPGAs (Field-Programmable Gate Arrays) – Flexible, low-latency hardware used in edge AI and specialized workloads.

- ASICs (Application-Specific Integrated Circuits) – Chips tailored to specific AI workloads, typically delivering the best performance-per-watt for the tasks they target.
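Why training frontier models takes thousands of accelerators can be checked with a common back-of-envelope rule: training compute is roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The sketch below applies that rule. The model size, token count, per-accelerator peak throughput, utilization, and training duration are all illustrative assumptions, not published figures for GPT, PaLM, or any specific chip.

```python
"""Back-of-envelope estimate of accelerators needed to train a dense transformer.

Uses the common approximation: training FLOPs ~= 6 * parameters * tokens.
All numeric inputs in the example run are illustrative assumptions.
"""


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute (forward plus backward pass)."""
    return 6.0 * params * tokens


def accelerators_needed(
    total_flops: float,
    peak_flops_per_sec: float,
    utilization: float,
    days: float,
) -> float:
    """Number of accelerators required to finish the run within `days`.

    `utilization` is the fraction of peak throughput actually sustained.
    """
    seconds = days * 24 * 3600
    sustained = peak_flops_per_sec * utilization
    return total_flops / (sustained * seconds)


if __name__ == "__main__":
    # Hypothetical model: 70B parameters trained on 1.4T tokens.
    flops = training_flops(params=70e9, tokens=1.4e12)
    # Hypothetical accelerator: 400 TFLOP/s peak, 40% sustained, 30-day run.
    n = accelerators_needed(flops, peak_flops_per_sec=400e12, utilization=0.4, days=30)
    print(f"total compute: {flops:.2e} FLOPs")
    print(f"accelerators needed: {n:,.0f}")
```

Under these assumptions the estimate comes to about 5.9 × 10^23 FLOPs and roughly 1,400 accelerators running for a month; halving utilization or the time budget doubles the count.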

1.2 Semiconductor Market Expansion

The surge in AI adoption has directly boosted semiconductor revenue. According to recent market reports, AI-specific chips are projected to account for a growing share of the overall semiconductor market, with growth rates outpacing those of general-purpose CPUs. Key drivers include:

- Cloud computing services expanding AI offerings

- Consumer devices incorporating AI features

- Edge AI applications requiring efficient inference chips

The market expansion has intensified competition among semiconductor firms, creating a race for both technology leadership and market share.

2. Key Technologies Driving AI Semiconductors

2.1 GPU Acceleration

Graphics Processing Units (GPUs) remain the cornerstone of AI computation. Their architecture supports massive parallelism, which is ideal for matrix multiplications and tensor operations at the heart of deep learning algorithms.
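Matrix multiplication maps so well onto GPUs because every element of the output C = A × B is an independent dot product, so thousands of them can be computed at the same time. The pure-Python sketch below makes that independence explicit and counts the floating-point operations (2 · M · N · K for an M×K by K×N product, counting each multiply-add as two operations). It runs serially and only illustrates the structure a GPU exploits; it is not how a GPU kernel is actually written.

```python
from itertools import product


def dot(row: list[float], col: list[float]) -> float:
    """One output element: the dot product of a row of A and a column of B."""
    return sum(a * b for a, b in zip(row, col))


def matmul(A: list[list[float]], B: list[list[float]]) -> list[list[float]]:
    """Compute C = A @ B, one independent output element at a time.

    No element of C depends on any other element of C, so on a GPU each
    (i, j) pair could be assigned to its own thread and computed in parallel.
    """
    m, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions must match")
    n = len(B[0])
    cols = [[B[r][j] for r in range(k)] for j in range(n)]  # columns of B
    C = [[0.0] * n for _ in range(m)]
    for i, j in product(range(m), range(n)):  # every iteration is independent
        C[i][j] = dot(A[i], cols[j])
    return C


def matmul_flops(m: int, k: int, n: int) -> int:
    """m * n outputs, each needing k multiply-adds (2 FLOPs apiece)."""
    return 2 * m * n * k


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul(A, B))                    # [[19.0, 22.0], [43.0, 50.0]]
    print(matmul_flops(4096, 4096, 4096))  # 137438953472, about 1.4e11 FLOPs
```

A single 4096 × 4096 layer multiplication already needs over 10^11 operations, all of them parallelizable, which is the workload profile GPU architectures are built around.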

Leading GPU manufacturers, such as NVIDIA and AMD, continue to invest heavily in AI-optimized designs:

- NVIDIA A100 and H100 GPUs deliver high-throughput AI training through specialized Tensor Cores and high-bandwidth NVLink interconnects.

- AMD Instinct accelerators focus on efficient compute and memory bandwidth for data-center AI deployments.

2.2 Specialized AI Accelerators

Beyond GPUs, AI accelerators are gaining traction due to their efficiency and performance:

- TPUs (Tensor Processing Units): Designed by Google, TPUs accelerate neural network operations, especially matrix multiplications and convolutions.

- ASICs for AI: Companies such as Graphcore, Cerebras, and Tenstorrent develop custom chips tailored to AI workloads, aiming for higher performance per watt than general-purpose processors.

- FPGAs: Popular in edge AI applications, FPGAs offer adaptability for tasks requiring low latency and hardware-level customization.

2.3 Memory and Bandwidth Innovations

AI workloads are extremely memory-intensive: moving model weights and activations between memory and compute units often limits throughput more than the arithmetic itself. This has driven innovation in high-bandwidth memory (HBM), DDR5, and advanced interconnects, which reduce bottlenecks in AI training and inference pipelines and enable faster computation at scale.

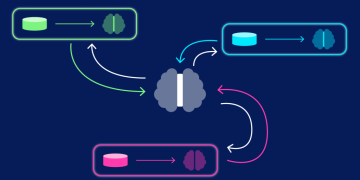
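A common way to reason about whether a workload is limited by memory or by compute is the roofline model: attainable throughput is the lesser of peak compute and (memory bandwidth × arithmetic intensity), where arithmetic intensity is FLOPs performed per byte moved. The sketch below applies it to a square matrix multiplication and to an elementwise addition. The hardware figures are hypothetical placeholders rather than specifications of any real chip, and the byte counts assume 16-bit values with each operand read once and the result written once.

```python
def matmul_intensity(n: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for an (n x n) @ (n x n) matmul.

    Assumes ideal reuse: A and B are each read once and C is written once.
    """
    flops = 2 * n**3
    bytes_moved = 3 * n * n * bytes_per_elem
    return flops / bytes_moved


def elementwise_add_intensity(bytes_per_elem: int = 2) -> float:
    """c = a + b: 1 FLOP per element, with two reads and one write."""
    return 1 / (3 * bytes_per_elem)


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: min(compute roof, bandwidth * intensity)."""
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    # Hypothetical accelerator: 400 TFLOP/s peak, 2 TB/s memory bandwidth.
    PEAK, BW = 400e12, 2e12
    ridge = PEAK / BW  # intensity needed to become compute-bound (200 FLOP/B here)
    for name, ai in [
        ("matmul n=4096", matmul_intensity(4096)),
        ("elementwise add", elementwise_add_intensity()),
    ]:
        bound = "compute" if ai >= ridge else "memory"
        rate = attainable_flops(ai, PEAK, BW)
        print(f"{name}: {ai:.1f} FLOP/B -> {rate:.2e} FLOP/s ({bound}-bound)")
```

With these assumed figures, the matrix multiplication (about 1,365 FLOPs per byte) sits far above the ridge point and is compute-bound, while the elementwise addition (about 0.17 FLOPs per byte) can use less than 0.1% of peak compute because it is starved by memory bandwidth. Raising bandwidth, as HBM does, lifts that ceiling directly.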

2.4 Heterogeneous Computing

Modern AI systems often combine CPUs, GPUs, TPUs, and other accelerators in heterogeneous architectures. This approach improves efficiency by assigning each stage of an AI workflow to the processor best suited to it, for example:

- Data preprocessing (CPU-intensive)

- Matrix operations (GPU/TPU-intensive)

- Sparse operations and inference optimization (ASIC/FPGA-intensive)

Heterogeneous computing has become a competitive differentiator for semiconductor companies targeting AI markets.
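The stage-to-processor mapping in the list above can be expressed as a simple dispatch table. The sketch below is purely illustrative: the `Device` categories, stage names, and routing rules are hypothetical, and real systems make these placement decisions in frameworks, compilers, and schedulers that also weigh data size, transfer cost, and device availability.

```python
from dataclasses import dataclass
from enum import Enum


class Device(Enum):
    CPU = "cpu"
    GPU_OR_TPU = "gpu/tpu"
    ASIC_OR_FPGA = "asic/fpga"


@dataclass(frozen=True)
class Stage:
    name: str
    kind: str  # hypothetical tags: "preprocess", "dense_math", "sparse_inference"


# Hypothetical routing table mirroring the stage list in the text.
ROUTING = {
    "preprocess": Device.CPU,
    "dense_math": Device.GPU_OR_TPU,
    "sparse_inference": Device.ASIC_OR_FPGA,
}


def assign(stages: list[Stage]) -> dict[str, Device]:
    """Map each pipeline stage to the class of processor best suited to it.

    Unknown stage kinds fall back to the CPU as the general-purpose option.
    """
    return {s.name: ROUTING.get(s.kind, Device.CPU) for s in stages}


if __name__ == "__main__":
    pipeline = [
        Stage("tokenize_and_batch", "preprocess"),
        Stage("transformer_layers", "dense_math"),
        Stage("pruned_model_serving", "sparse_inference"),
        Stage("logging", "other"),
    ]
    for name, device in assign(pipeline).items():
        print(f"{name:>22} -> {device.value}")
```

Falling back to the CPU for unrecognized work reflects its role as the flexible host that coordinates the more specialized accelerators.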

3. Competitive Landscape in AI Semiconductors

3.1 Leading Players

The AI semiconductor market is led by established technology giants, alongside a growing field of specialized startups:

- NVIDIA: Market leader in AI GPUs, reinforced by a mature software ecosystem that includes CUDA and NVIDIA AI Enterprise.

- Intel: Focusing on Xe GPUs, Habana Labs accelerators, and heterogeneous AI platforms.

- AMD: Competes in GPU acceleration for AI training and inference.

- Google: Developer of TPUs, which power AI workloads on Google Cloud.

- China-based players: Companies like Cambricon and Huawei HiSilicon target domestic AI chip markets with government support.

Startups such as Graphcore, Cerebras, and Tenstorrent are pushing innovation with unique architectures that challenge traditional GPU dominance.

3.2 Strategies for Market Leadership

Semiconductor firms employ several strategies to gain competitive advantage in AI:

- Hardware-software co-design: Optimizing chips alongside frameworks for maximum AI efficiency.

- Ecosystem building: NVIDIA’s software libraries (CUDA, cuDNN) create a lock-in effect for developers.

- Vertical integration: Companies like Google leverage their hardware for cloud AI services, creating synergies between infrastructure and AI workloads.

- Geographic diversification: Expanding manufacturing and R&D capabilities globally to mitigate supply chain risks and geopolitical uncertainties.

4. Geopolitical and Supply Chain Implications

4.1 Semiconductors as a Strategic Asset

AI chips have become critical to national security and technological leadership. Governments are investing heavily to secure domestic semiconductor supply chains. For instance:

- The U.S. CHIPS and Science Act (2022) allocates roughly $52 billion for semiconductor manufacturing and R&D.

- China is accelerating its self-sufficiency programs in AI chip design.

- The EU, through the European Chips Act, aims to bolster local semiconductor production.

These investments are not only commercial but also strategic, reflecting the high stakes of AI supremacy.

4.2 Supply Chain Challenges

Global semiconductor supply chains face bottlenecks due to:

- Limited foundry capacity for advanced nodes (e.g., TSMC 3nm, Samsung 3nm)

- Material shortages (high-purity silicon, rare-earth elements)

- Geopolitical tensions impacting cross-border trade

Surging AI demand exacerbates these challenges, pressuring manufacturers to scale production while maintaining quality and reliability.

5. Future Trends in AI and Semiconductors

5.1 Next-Generation Chip Architectures

Emerging chip designs focus on:

- Sparse and low-precision computation for efficiency

- Neuromorphic computing inspired by brain architecture

- In-memory computing to reduce latency and energy consumption

These innovations aim to handle the growing complexity of AI models while improving performance-per-watt ratios.
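Low-precision computation, the first item above, is already widely deployed. A minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several, is shown below: floating-point values are mapped to 8-bit integers using a single scale factor, cutting storage and memory traffic fourfold relative to 32-bit floats at the cost of a bounded rounding error. The function names are hypothetical; production toolchains add calibration, per-channel scales, and integer-only compute kernels.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to the int8 range [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floating-point values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.003, -0.64]  # illustrative values
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("int8 codes:", codes)
    print(f"scale: {scale:.4f}, max abs error: {max_err:.4f} (at most scale / 2)")
```

Because the scale is chosen from the largest magnitude, every value falls inside the int8 range and its reconstruction error is at most half a quantization step; very small values such as 0.003 round to zero, which is one reason sparsity and low precision are often exploited together.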

5.2 AI at the Edge

Edge AI will drive demand for small, efficient AI accelerators, enabling real-time processing for autonomous vehicles, IoT devices, and industrial robots. Edge chips require energy efficiency without compromising inference performance.
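For battery-powered and thermally constrained devices, the useful comparison is often energy per inference rather than raw speed. Energy is power multiplied by time, so a chip that draws more power can still come out ahead if it finishes much faster. The sketch below assumes constant power draw during each inference, and the chip figures are hypothetical, chosen only to illustrate the arithmetic.

```python
def energy_per_inference_mj(power_watts: float, latency_ms: float) -> float:
    """Energy in millijoules, assuming constant power: W x ms = mJ."""
    return power_watts * latency_ms


def inferences_per_joule(power_watts: float, latency_ms: float) -> float:
    """A performance-per-watt style metric (1 J = 1000 mJ)."""
    return 1000.0 / energy_per_inference_mj(power_watts, latency_ms)


if __name__ == "__main__":
    # Hypothetical edge options running the same model, one inference at a time.
    chips = {
        "general-purpose CPU": (5.0, 40.0),  # 5 W, 40 ms per inference
        "edge AI accelerator": (8.0, 4.0),   # 8 W, 4 ms per inference
    }
    for name, (watts, ms) in chips.items():
        mj = energy_per_inference_mj(watts, ms)
        print(f"{name}: {mj:.0f} mJ/inference, {inferences_per_joule(watts, ms):.1f} inferences/J")
```

Here the hypothetical accelerator draws more power (8 W versus 5 W) yet uses about six times less energy per inference (32 mJ versus 200 mJ), because it finishes ten times sooner.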

5.3 Software-Defined AI Hardware

Software abstraction layers will increasingly determine how efficiently hardware is used in practice. Optimized frameworks, compilers, and runtime environments will be crucial to fully exploiting AI chip capabilities.
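Loop tiling is one example of the kind of transformation AI compilers apply to fit software to hardware: a matrix multiplication is split into blocks small enough to stay in fast on-chip memory, so each loaded value is reused many times before it is evicted. The sketch below shows the transformation in plain Python; the tile size is an arbitrary assumption, and the Python interpreter itself will not show the cache benefit. What it demonstrates is that the blocked loop order computes exactly the same result as the naive one, which is what allows a compiler to choose it freely for a given chip.

```python
Matrix = list[list[float]]


def matmul_naive(A: Matrix, B: Matrix) -> Matrix:
    """Textbook triple loop: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    m, k, n = len(A), len(B), len(B[0])
    C = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            for p in range(k):
                C[i][j] += A[i][p] * B[p][j]
    return C


def matmul_tiled(A: Matrix, B: Matrix, tile: int = 2) -> Matrix:
    """Same computation, iterated block by block (tile x tile).

    On real hardware each block of A, B, and C would be staged in fast
    local memory; here only the loop structure is illustrated.
    """
    m, k, n = len(A), len(B), len(B[0])
    C = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            for p0 in range(0, k, tile):
                # Work on one block of C using one block each of A and B.
                for i in range(i0, min(i0 + tile, m)):
                    for j in range(j0, min(j0 + tile, n)):
                        acc = C[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += A[i][p] * B[p][j]
                        C[i][j] = acc
    return C


if __name__ == "__main__":
    A = [[float(i * 5 + j) for j in range(5)] for i in range(3)]
    B = [[float(i - j) for j in range(4)] for i in range(5)]
    print(matmul_tiled(A, B, tile=2) == matmul_naive(A, B))
```

For each output element the tiled version still adds the products in the same order, so the results match exactly, not just approximately; only the order in which output blocks are visited changes.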

5.4 Sustainability in AI Semiconductor Production

Environmental impact is becoming a key consideration. Energy-efficient chip design, green manufacturing processes, and minimizing the carbon footprint of AI data centers will be major differentiators for leading semiconductor companies.

Conclusion

The convergence of AI and semiconductors has created one of the most competitive and strategic technology markets of the 21st century. The demand for high-performance, energy-efficient chips is driving innovation across GPUs, TPUs, ASICs, and FPGAs, while geopolitical tensions and supply chain dynamics intensify competition globally.

Semiconductor firms are no longer just component suppliers—they are strategic enablers of AI breakthroughs, defining the pace of innovation in autonomous systems, generative AI, and cloud intelligence. Companies that can balance performance, efficiency, and ecosystem support will dominate the market, while nations that secure semiconductor production and AI infrastructure will maintain technological leadership.

As AI workloads continue to grow and diversify, the race for superior AI semiconductors is likely to intensify, driving innovation, reshaping supply chains, and determining the global balance of technological power.