Introduction: A New Era of Computation

The digital world is undergoing a profound transformation, driven largely by artificial intelligence (AI) and machine learning. AI is no longer just a tool for research and development—it has become a critical component in industries ranging from healthcare and finance to automotive and entertainment. However, as AI models become more complex, the demand for computational power has skyrocketed, creating an urgent need for new AI chips and innovative hardware architectures.

To meet these demands, a new breed of AI chips is emerging, along with heterogeneous architectures that combine different types of processing units to deliver optimal performance. These innovations are revolutionizing the way computation is done, enabling faster processing times, more efficient energy usage, and the ability to solve complex problems that were once thought to be beyond reach.

In this article, we will explore how AI chips and heterogeneous architectures are driving the ongoing computational power revolution, looking at the core technologies, industry trends, and challenges involved in this rapid evolution.

1. The Rise of AI and the Need for Advanced Computational Power

1.1 The Role of AI in Modern Industries

Artificial intelligence has already proven its utility in a wide range of sectors, including:

- Healthcare: AI models are being used to analyze medical images, predict patient outcomes, and even discover new drugs. AI is also improving the accuracy of diagnostic tools and supporting personalized medicine.

- Autonomous Vehicles: In the automotive sector, AI powers self-driving cars by processing large amounts of data from sensors and cameras in real time to navigate complex road conditions.

- Finance: AI is used for fraud detection, algorithmic trading, and customer service, processing vast volumes of transaction data at high speed.

- Entertainment and Media: AI-driven recommendations on platforms like Netflix and Spotify are reshaping the way content is consumed, while AI tools are also being used in game development and content creation.

For AI to keep progressing in these domains, it needs computational power that can handle massive volumes of data and run complex algorithms, often in real time. This has sparked a race to develop more powerful, efficient, and scalable chips.

1.2 Traditional Computing Struggles with AI Demands

While traditional central processing units (CPUs) serve general-purpose computing well, they are not well suited to the highly parallel nature of AI tasks. Neural networks, for example, spend most of their time applying the same arithmetic (chiefly matrix multiplications) to vast amounts of data at once, whereas CPUs devote their silicon to a relatively small number of powerful cores optimized for fast sequential execution and complex control flow.

This mismatch has driven the adoption of hardware better suited to AI workloads: graphics processing units (GPUs), originally built for rendering graphics, as well as tensor processing units (TPUs) and field-programmable gate arrays (FPGAs). These have emerged as the leading hardware platforms for AI, offering higher throughput and efficiency for tasks such as deep learning, image recognition, and natural language processing (NLP).

However, the sheer complexity and scale of modern AI models, especially in deep learning, mean that no single type of processor is best at every part of a workload. This is where heterogeneous architectures come into play.
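To make the "highly parallel nature of AI tasks" concrete: in a matrix multiplication, each output element is an independent dot product, so the elements can be computed in any order, or all at once on parallel hardware. The minimal sketch below, in plain Python, computes them in a shuffled order to show that the result does not depend on the order. It only illustrates that independence; it does not itself run anything in parallel, and the function names are hypothetical.

```python
import random


def matmul_element(a, b, i, j):
    # One output element: the dot product of row i of A with column j of B.
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a, b, seed=0):
    rows, cols = len(a), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    # List every (i, j) output position, then visit them in a random order.
    positions = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(positions)
    for i, j in positions:
        # No element depends on any other output element, so the visiting
        # order is irrelevant; parallel hardware could compute them all at once.
        out[i][j] = matmul_element(a, b, i, j)
    return out


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_any_order(A, B))  # [[19, 22], [43, 50]]
```

A GPU exploits exactly this property, for example by assigning each output position to its own thread, whereas a single CPU core works through the positions one after another.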

2. AI Chips: The Hardware Backbone of the Future

2.1 What Are AI Chips?

AI chips are specialized hardware designed, or adapted, to accelerate artificial intelligence tasks. They are built to process the massive datasets and complex computations associated with AI models, offering significant advantages over traditional CPUs.

The major types of AI chips include:

- GPUs (Graphics Processing Units): Originally designed for rendering graphics, GPUs are highly parallelized and are capable of performing many calculations simultaneously. This makes them well-suited for the large-scale matrix multiplications that are common in AI tasks, such as training deep learning models.

- TPUs (Tensor Processing Units): Developed by Google, TPUs are custom-built chips specifically designed for accelerating deep learning workloads. They are optimized for handling tensor computations, which are fundamental to neural networks.

- FPGAs (Field-Programmable Gate Arrays): FPGAs are hardware devices whose logic can be configured, and reconfigured after manufacturing, to suit specific applications. This flexibility makes them well suited to customized AI workloads that GPUs or TPUs might not handle efficiently.

- ASICs (Application-Specific Integrated Circuits): ASICs are custom-designed chips built for specific applications. For AI, ASICs can be highly optimized for particular algorithms, providing high performance and low power consumption; Google's TPUs are themselves an example of an AI ASIC.

Each of these chip types offers distinct advantages depending on the specific requirements of the AI task at hand, and the choice of chip can significantly impact the speed, power efficiency, and cost of deploying AI models.
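The GPU entry above notes that matrix multiplications are common in AI tasks. A fully connected neural-network layer is a simple case: it computes y = Wx + b, performing one multiply-accumulate (MAC) per weight for every input example. The sketch below implements such a layer in plain Python and counts its MACs. The layer sizes and batch size are arbitrary illustrative values, not figures from any particular model.

```python
def dense_forward(weights, bias, x):
    """Fully connected layer y = W x + b, with W given as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(weights, bias)]


def dense_macs(in_features, out_features, batch_size=1):
    # Each weight is used once per input example: one multiply-accumulate each.
    return in_features * out_features * batch_size


W = [[1, -1, 2],
     [0, 3, 1]]
b = [1, -2]
print(dense_forward(W, b, [1, 2, 3]))         # [6, 7]
print(dense_macs(4096, 4096, batch_size=64))  # 1073741824
```

A single illustrative 4096-by-4096 layer applied to a batch of 64 inputs already needs about a billion MACs, and a deep network stacks many such layers. This is the kind of regular, repetitive arithmetic that GPUs, TPUs, and AI ASICs are built to accelerate.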

2.2 Performance Advantages of AI Chips

AI chips offer several key advantages over traditional computing hardware:

- Parallelism: AI tasks, especially in deep learning, require the simultaneous processing of vast amounts of data. AI chips, particularly GPUs, are designed to handle massive parallelism, which allows them to process multiple data points simultaneously.

- Efficiency: AI chips are optimized for the types of computations needed in machine learning. This specialization yields higher throughput and better energy efficiency (more operations per watt) than general-purpose CPUs.

- Scalability: AI chips can be deployed in large numbers and connected by high-bandwidth interconnects, so training on large datasets can be distributed across many processing units, enabling increasingly sophisticated models.

- Latency: Specialized chips like TPUs can offer lower latency than CPUs for AI workloads, making them well suited to applications where real-time or near-real-time processing is critical, such as autonomous driving or real-time speech recognition.
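Efficiency is usually expressed as performance per watt, which works out to operations per joule of energy. The back-of-the-envelope sketch below compares two devices using made-up throughput and power figures. These are placeholders chosen for easy arithmetic, not measurements of any real CPU or accelerator.

```python
def perf_per_watt(ops_per_second, watts):
    # (operations / s) / (joules / s) = operations per joule
    return ops_per_second / watts


def energy_joules(total_ops, ops_per_second, watts):
    # Runtime = work / rate; energy = power * runtime.
    return watts * (total_ops / ops_per_second)


# Hypothetical devices: illustrative numbers only.
cpu = {"ops_per_second": 1e12, "watts": 200.0}
accelerator = {"ops_per_second": 100e12, "watts": 400.0}

job_ops = 1e15  # hypothetical workload size, in operations
for name, dev in (("cpu", cpu), ("accelerator", accelerator)):
    print(name,
          perf_per_watt(dev["ops_per_second"], dev["watts"]),
          energy_joules(job_ops, dev["ops_per_second"], dev["watts"]))
```

In this invented example, the accelerator draws twice as much power but finishes the job 100 times sooner (10 s versus 1,000 s). It therefore uses 50 times less energy: 4,000 J versus 200,000 J. A specialized chip can draw more power than a CPU and still be far more energy-efficient for the same work.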

3. Heterogeneous Architectures: Combining the Power of Multiple Processing Units

3.1 What Are Heterogeneous Architectures?

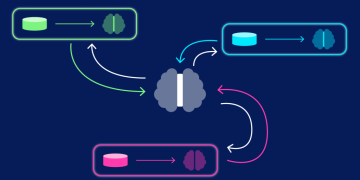

Heterogeneous computing refers to the use of different types of processors in a system to perform different types of tasks more efficiently. Instead of relying on a single type of processor, heterogeneous architectures combine multiple processing units—such as CPUs, GPUs, TPUs, and FPGAs—into a unified system that can perform specialized tasks in parallel.

The key concept behind heterogeneous architectures is that different types of processors excel at different kinds of tasks. By using the best tool for each job, these architectures maximize overall performance and efficiency.

3.2 Key Components of a Heterogeneous System

A heterogeneous system typically includes a combination of the following processing units:

- CPUs (Central Processing Units): CPUs are still the most general-purpose processors, excelling in single-threaded tasks and control logic. In a heterogeneous system, the CPU handles general-purpose computing and system-level operations.

- GPUs (Graphics Processing Units): As mentioned earlier, GPUs are designed for parallel computing, making them ideal for matrix operations and tasks related to deep learning. They handle high-throughput AI computations.

- FPGAs (Field-Programmable Gate Arrays): FPGAs can be customized and reprogrammed to handle specific tasks with great efficiency. In heterogeneous systems, they are often used for tasks requiring low latency and specific optimizations.

- TPUs (Tensor Processing Units): TPUs are specialized for tensor-based operations, which are commonly used in deep learning. In a heterogeneous system, TPUs accelerate the training and inference of AI models.
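The division of labor above amounts to sending each job to the processor type that suits it best. The sketch below shows a hypothetical scheduler that assigns each task to the first available processor type in a preference list and falls back to the CPU. The task kinds, the preference table, and the function names are illustrative assumptions, not the policy or API of any real runtime.

```python
from dataclasses import dataclass

# Hypothetical preference table: processor types suited to each kind of task,
# in order of preference. The CPU is always the last-resort fallback.
PREFERENCES = {
    "control": ["cpu"],
    "dense_training": ["tpu", "gpu", "cpu"],
    "batch_inference": ["tpu", "gpu", "cpu"],
    "low_latency_stream": ["fpga", "gpu", "cpu"],
}


@dataclass
class Task:
    name: str
    kind: str


def assign(tasks, available):
    """Map each task name to the first preferred processor type that is present."""
    plan = {}
    for task in tasks:
        choices = PREFERENCES.get(task.kind, ["cpu"])
        plan[task.name] = next((p for p in choices if p in available), "cpu")
    return plan


tasks = [Task("scheduler", "control"),
         Task("train_step", "dense_training"),
         Task("sensor_filter", "low_latency_stream")]
print(assign(tasks, available={"cpu", "gpu"}))
# {'scheduler': 'cpu', 'train_step': 'gpu', 'sensor_filter': 'gpu'}
```

A production scheduler would also weigh the cost of moving data between devices and the current load on each one. This sketch ignores both so that it shows only the "best tool for each job" mapping.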

3.3 Benefits of Heterogeneous Architectures

Heterogeneous systems offer several advantages:

- Optimized Performance: By using different processors for different tasks, heterogeneous systems allow for the most efficient execution of specific workloads. For example, a CPU may handle system-level management and non-parallel tasks, while a GPU handles the parallel computations required for training deep neural networks.

- Energy Efficiency: Specialized chips like TPUs, GPUs, and FPGAs are often much more energy-efficient than general-purpose CPUs, particularly when performing AI-related tasks. By using these processors selectively, heterogeneous systems can achieve higher performance-per-watt.

- Flexibility: Heterogeneous systems can integrate different types of processors according to the needs of the workload. This adaptability is critical as AI workloads become increasingly diverse.

- Faster Time-to-Insight: By accelerating certain tasks with specialized hardware, heterogeneous architectures reduce the time needed to train AI models, which is crucial for businesses aiming to gain faster insights and stay competitive.
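Offloading the parallel work to a GPU or other accelerator while the CPU keeps the rest has a well-known limit, Amdahl's law. If a fraction p of the runtime is accelerated by a factor s and the remainder is unchanged, the overall speedup is 1 / ((1 - p) + p / s). The sketch below evaluates this formula for a few illustrative values of p with an assumed 100x accelerator. These are example inputs, not measurements.

```python
def amdahl_speedup(parallel_fraction, accel_factor):
    """Overall speedup when only `parallel_fraction` of the runtime is accelerated."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if accel_factor <= 0:
        raise ValueError("accel_factor must be positive")
    serial = 1.0 - parallel_fraction  # part left on the CPU, not sped up
    return 1.0 / (serial + parallel_fraction / accel_factor)


for p in (0.5, 0.9, 0.99):
    print(p, round(amdahl_speedup(p, accel_factor=100), 2))
# 0.5 1.98
# 0.9 9.17
# 0.99 50.25
```

Even with a 100x accelerator, a workload that is 90% parallelizable speeds up only about 9x overall, because the serial part left on the CPU dominates. This is why heterogeneous designs pay attention to the CPU side and to how much of a workload can actually be offloaded.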

4. Industry Trends: The Future of AI Chips and Heterogeneous Architectures

4.1 Growing Adoption of AI Chips in Data Centers

As AI applications become more widespread, the demand for specialized AI chips in data centers is increasing. Leading cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud are investing heavily in AI-optimized infrastructure. They offer customers cloud access to GPUs and, depending on the provider, to TPUs (Google Cloud) or FPGA-based instances (for example, on AWS). These services allow businesses to scale their AI applications without investing in expensive on-premises hardware.

4.2 Integration of AI Chips in Consumer Devices

Beyond cloud data centers, AI chips are also making their way into consumer devices. Smartphones, smart speakers, wearables, and home appliances are increasingly incorporating AI processing capabilities, driven by specialized chips designed to handle tasks such as voice recognition, image processing, and predictive analytics.

4.3 AI Hardware as a Service (AI HaaS)

AI Hardware as a Service (AI HaaS) is an emerging trend in which businesses lease AI hardware instead of purchasing it outright. This model lets companies outsource the complexity of managing AI hardware while they focus on developing and deploying AI solutions. Major companies in the AI chip market, including NVIDIA, Intel, and Google, now offer hosted access to their AI hardware to meet the growing demand.

4.4 The Role of Edge Computing

Edge computing refers to the practice of processing data closer to the source of data generation (such as IoT devices) rather than relying solely on centralized cloud servers. Edge AI chips are designed to perform local AI computations in real time, reducing latency and minimizing the need for data transfer. This is particularly important for applications like autonomous vehicles, where split-second decision-making is crucial.
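To put "split-second" in numbers: the distance a vehicle covers while waiting for a result is its speed multiplied by the latency. The sketch below compares a hypothetical cloud round trip with a hypothetical on-device inference. Both latency values and the vehicle speed are assumptions chosen for illustration, not measurements of any real system.

```python
def distance_travelled_m(speed_kmh, latency_ms):
    speed_m_per_s = speed_kmh / 3.6  # convert km/h to m/s
    return speed_m_per_s * (latency_ms / 1000.0)


speed_kmh = 108  # assumed highway speed, equal to 30 m/s
for label, latency_ms in (("cloud round trip (assumed)", 150),
                          ("on-device inference (assumed)", 20)):
    print(f"{label}: {distance_travelled_m(speed_kmh, latency_ms):.1f} m")
```

At 30 m/s, an assumed 150 ms cloud round trip means the car moves about 4.5 m before the answer arrives. An assumed 20 ms local inference reduces that to about 0.6 m, which is the practical motivation for running AI on chips inside the vehicle.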

Conclusion: The Ongoing Revolution in Computational Power

The AI chip market and the advent of heterogeneous architectures are driving a computational power revolution that is transforming industries worldwide. The ability to handle increasingly complex AI models and process massive datasets in real time has far-reaching implications, from improving healthcare outcomes to revolutionizing transportation systems.

As we move forward, innovations in AI hardware will continue to push the boundaries of what’s possible, enabling faster, more efficient AI applications that were previously thought to be unattainable. The combination of AI chips and heterogeneous computing represents the future of computational power, opening the door to new possibilities and accelerating the pace of technological advancement.