Introduction: The Evolution of NLP

Natural Language Processing (NLP) has been at the heart of AI research for decades, enabling machines to understand, interpret, and respond to human language. Traditional NLP focused on language understanding tasks, such as sentiment analysis, named entity recognition, and machine translation. These tasks primarily revolved around analyzing and interpreting text.

The rise of generative AI marks a significant shift. Modern NLP technologies can now generate coherent, contextually relevant, and creative text, images, and even code. This evolution has been driven by advances in deep learning, transformer architectures, and large language models (LLMs).

This article explores the journey of NLP from understanding to generation, highlighting key technologies, model architectures, applications, and challenges shaping the next era of AI-driven language technologies.

1. Foundations of NLP: Understanding Human Language

1.1 Early NLP Approaches

Early NLP relied heavily on rule-based systems and statistical methods. Approaches included:

- Syntax-based Parsing: Utilizing grammar rules to analyze sentence structure.

- Bag-of-Words Models: Representing text as word frequency vectors that ignore word order and context.

- Hidden Markov Models (HMMs): Applied in part-of-speech tagging and speech recognition.

- TF-IDF and N-grams: Capturing word importance and co-occurrence patterns in documents.

These methods were effective for structured tasks but lacked the ability to capture semantic meaning and contextual nuances.
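The representations listed above can be sketched in a few lines of plain Python. This sketch assumes whitespace tokenization and the common `tf * log(N / df)` weighting; libraries such as scikit-learn use smoothed variants. The function names are illustrative, not from any particular library. Note that the bag-of-words vectors for "the cat sat" and "sat the cat" are identical, which is the loss of context these methods suffer from.

```python
import math
from collections import Counter


def bag_of_words(doc: str) -> Counter:
    """Count word occurrences; word order is discarded."""
    return Counter(doc.lower().split())


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return the contiguous n-token sequences in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Score each term by (term frequency in the document) * log(N / document frequency)."""
    bags = [bag_of_words(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for bag in bags for term in bag)
    scores = []
    for bag in bags:
        total = sum(bag.values())
        scores.append({term: (count / total) * math.log(n_docs / df[term])
                       for term, count in bag.items()})
    return scores


docs = ["the cat sat on the mat", "the dog sat on the log", "cats and dogs"]
weights = tf_idf(docs)
# "cat" appears in only one document, so it outscores the more frequent "the".
print(round(weights[0]["cat"], 3), round(weights[0]["the"], 3))
print(bag_of_words("the cat sat") == bag_of_words("sat the cat"))  # True
print(ngrams("the cat sat".split(), 2))  # [('the', 'cat'), ('cat', 'sat')]
```

A term that appears in every document gets `log(1) = 0` and therefore contributes nothing, which is how TF-IDF downweights uninformative words.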

1.2 Word Embeddings and Contextual Representations

The introduction of word embeddings revolutionized NLP. Techniques like Word2Vec and GloVe mapped words into continuous vector spaces, capturing semantic relationships (e.g., “king” – “man” + “woman” ≈ “queen”).
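The analogy can be reproduced with vector arithmetic and cosine similarity. The sketch below uses hand-picked, hypothetical 3-dimensional vectors rather than trained Word2Vec or GloVe embeddings (which typically have a few hundred dimensions), so it illustrates the mechanics, not real learned geometry. The three query words are excluded from the candidates, as is usual when evaluating analogies.

```python
import numpy as np

# Hypothetical toy embeddings: the axes loosely stand for "royalty", "male", "female".
embeddings = {
    "king":  np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.9, 0.1, 0.8]),
    "man":   np.array([0.1, 0.8, 0.1]),
    "woman": np.array([0.1, 0.1, 0.8]),
    "apple": np.array([0.0, 0.2, 0.2]),
}


def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def analogy(a: str, b: str, c: str, vocab: dict[str, np.ndarray]) -> str:
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = vocab[a] - vocab[b] + vocab[c]
    candidates = (w for w in vocab if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vocab[w], target))


print(analogy("king", "man", "woman", embeddings))  # queen
```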

Later, contextual embeddings from models like ELMo and BERT enabled NLP systems to account for word meaning in context, allowing for:

- Disambiguation of polysemous words

- Improved performance on text classification, question answering, and sentiment analysis

- Transfer learning across multiple NLP tasks

This was a critical step in bridging the gap between basic understanding and the capability for text generation.

2. The Transformer Revolution

2.1 Introduction of Transformers

The 2017 paper “Attention Is All You Need” introduced the transformer architecture, which became the backbone of modern NLP. Key features include:

- Self-Attention Mechanisms: Capturing dependencies between every pair of tokens in a sequence, regardless of their distance.

- Parallel Processing: Unlike recurrent models, transformers allow simultaneous processing of entire sequences.

- Scalability: Enabling the training of massive models with billions of parameters.

Transformers revolutionized NLP by providing the capacity to model both language understanding and generation tasks efficiently.
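The core computation from the original paper, scaled dot-product attention `softmax(Q K^T / sqrt(d_k)) V`, fits in a few lines of NumPy. This is a single-head sketch in which random matrices stand in for learned projection weights; it omits multi-head attention, masking, positional encodings, and the rest of the transformer block. It does show why attention parallelizes well: all pairwise token scores come from one matrix product rather than a step-by-step recurrence.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X has shape (seq_len, d_model). Returns the (seq_len, d_k) outputs and the
    (seq_len, seq_len) attention weights.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # Every position scores every other position at once, whatever their distance.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # stand-in for 4 token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
```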

2.2 Pretraining and Fine-Tuning Paradigm

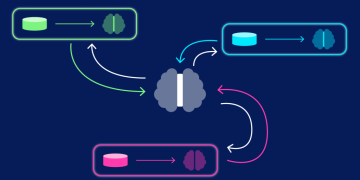

Large-scale pretraining on vast text corpora followed by fine-tuning on specific tasks became a dominant paradigm:

- Pretraining: Models learn general language patterns, grammar, and knowledge from massive datasets.

- Fine-tuning: Models adapt to specific tasks like sentiment analysis, summarization, or dialogue systems.

This approach has led to state-of-the-art performance on benchmarks such as GLUE, SQuAD, and SuperGLUE.
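Pretraining needs no human labels because the targets come from the text itself. The sketch below builds training examples for two common self-supervised objectives: next-token prediction, used by GPT-style models, and masked language modeling, used by BERT. The masking is simplified: BERT replaces only 80% of the selected tokens with `[MASK]`, swaps 10% for random tokens, and leaves 10% unchanged. The function names are illustrative.

```python
import random


def next_token_examples(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Causal LM objective: every prefix is an input whose target is the following token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mask_tokens(tokens: list[str], mask_prob: float = 0.15, seed: int = 0,
                mask_token: str = "[MASK]") -> tuple[list[str], dict[int, str]]:
    """Simplified masked LM objective: hide random tokens and record them as targets."""
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(mask_token)
            targets[i] = tok  # the model must recover this token from its context
        else:
            masked.append(tok)
    return masked, targets


tokens = "the model learns patterns from raw text".split()
for context, target in next_token_examples(tokens)[:3]:
    print(context, "->", target)
print(mask_tokens(tokens, mask_prob=0.3))
```

Fine-tuning then continues training the same weights on a smaller labeled dataset for the target task.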

3. From Understanding to Generation: Large Language Models

3.1 Emergence of Generative Models

While early NLP focused on understanding, Generative Pretrained Transformers (GPT) demonstrated that language models could produce coherent, human-like text.
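GPT-style models generate text autoregressively: at each step the model assigns a score (logit) to every vocabulary token, one token is chosen and appended, and the extended sequence is fed back in. The sketch below replaces the transformer with a hypothetical hand-written table of next-token logits that depends only on the previous token, so it demonstrates the decoding loop, temperature scaling, and greedy versus sampled decoding, not a real model.

```python
import numpy as np

VOCAB = ["<s>", "the", "cat", "sat", "down", "</s>"]
# Hypothetical next-token logits: row i scores every token as a successor of VOCAB[i].
# A real model computes these scores from the entire preceding context.
LOGITS = np.array([
    # <s>   the   cat   sat  down  </s>
    [-9.0,  3.0,  0.0,  0.0,  0.0, -9.0],  # after <s>
    [-9.0, -9.0,  3.0,  0.5,  0.0, -9.0],  # after "the"
    [-9.0, -1.0, -9.0,  3.0,  0.0,  0.5],  # after "cat"
    [-9.0,  0.5, -9.0, -9.0,  3.0,  0.5],  # after "sat"
    [-9.0, -9.0, -9.0, -9.0, -9.0,  3.0],  # after "down"
    [-9.0, -9.0, -9.0, -9.0, -9.0,  0.0],  # after </s> (never reached)
])


def next_token_probs(prev_id: int, temperature: float) -> np.ndarray:
    """Softmax over temperature-scaled logits; lower temperature sharpens the distribution."""
    z = LOGITS[prev_id] / temperature
    z = np.exp(z - z.max())
    return z / z.sum()


def generate(max_new_tokens: int = 10, temperature: float = 1.0, seed: int = 0) -> str:
    rng = np.random.default_rng(seed)
    ids = [VOCAB.index("<s>")]
    for _ in range(max_new_tokens):
        if temperature == 0.0:
            next_id = int(np.argmax(LOGITS[ids[-1]]))  # greedy: always take the top token
        else:
            probs = next_token_probs(ids[-1], temperature)
            next_id = int(rng.choice(len(VOCAB), p=probs))  # sample from the distribution
        if VOCAB[next_id] == "</s>":
            break
        ids.append(next_id)  # the chosen token becomes part of the context
    return " ".join(VOCAB[i] for i in ids[1:])


print(generate(temperature=0.0))  # the cat sat down
print(generate(temperature=1.0, seed=1))  # a sampled, possibly different, sequence
```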

Key features of generative models:

- Contextual Coherence: Ability to generate multi-sentence or multi-paragraph content maintaining logical flow.

- Task Adaptability: Models can perform summarization, translation, question answering, and creative writing.

- Zero-shot and Few-shot Learning: Performing new tasks from just a few examples in the prompt, or none at all, thanks to extensive pretraining.
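In zero-shot and few-shot use, no model weights are updated; the task is conveyed entirely through the prompt. A minimal prompt builder, assuming a simple hypothetical `Input:`/`Output:` template (real systems and chat APIs use their own formats):

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Place solved examples before the new input; with no examples the prompt is zero-shot."""
    parts = [instruction]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    parts.append(f"Input: {query}\nOutput:")  # the model is expected to continue from here
    return "\n\n".join(parts)


examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
prompt = build_few_shot_prompt("Classify the sentiment of each review.", examples,
                               "Setup was quick and painless.")
print(prompt)
```

Passing an empty `examples` list yields the zero-shot variant of the same prompt.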

Large language models like GPT-4, Claude, and LLaMA illustrate how NLP has expanded from predictive understanding to generative intelligence.

3.2 Fine-Tuning vs. Instruction-Tuning

Generative AI relies on several post-training techniques to adapt models to tasks and align them with human preferences:

- Fine-Tuning: Adapting pretrained models to a specific domain or dataset.

- Instruction-Tuning: Teaching models to follow natural language instructions, improving usability in conversational AI.

- Reinforcement Learning from Human Feedback (RLHF): Trains a reward model on human preference judgments, then optimizes the model against that reward, improving helpfulness, safety, and alignment.

These methods help make AI-generated content coherent, relevant, and aligned with user intent, although none of them guarantees it.
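In the RLHF recipe popularized by InstructGPT, human labelers compare pairs of model outputs, a reward model is trained to score the preferred output higher, and the language model is then optimized against that reward, typically with PPO. The sketch below covers only the reward-model step: the pairwise (Bradley-Terry) loss `-log sigmoid(r_chosen - r_rejected)` and a toy linear reward model trained by gradient descent on hypothetical two-dimensional response features. In practice the reward model is usually itself a fine-tuned language model that scores full text.

```python
import numpy as np


def preference_loss(r_chosen, r_rejected) -> float:
    """Mean of -log sigmoid(r_chosen - r_rejected) over comparison pairs."""
    margin = np.asarray(r_chosen, dtype=float) - np.asarray(r_rejected, dtype=float)
    # -log sigmoid(m) == log(1 + exp(-m)); np.logaddexp(0, -m) evaluates it stably.
    return float(np.mean(np.logaddexp(0.0, -margin)))


def train_linear_reward_model(x_chosen, x_rejected, lr=0.5, steps=500):
    """Fit weights w so that reward(x) = x @ w ranks chosen responses above rejected ones."""
    diff = x_chosen - x_rejected
    w = np.zeros(diff.shape[1])
    for _ in range(steps):
        margin = diff @ w
        # Gradient of log(1 + exp(-margin)) w.r.t. w is -sigmoid(-margin) * diff.
        grad = -(diff * (1.0 / (1.0 + np.exp(margin)))[:, None]).mean(axis=0)
        w -= lr * grad
    return w


# Hypothetical features per response: [answers the question, contains an insult].
x_chosen = np.array([[1.0, 0.0], [0.8, 0.1], [0.9, 0.0]])
x_rejected = np.array([[0.2, 0.9], [0.1, 0.7], [0.9, 0.8]])

w = train_linear_reward_model(x_chosen, x_rejected)
print(w)  # positive weight on the first feature, negative on the second
print(preference_loss(x_chosen @ w, x_rejected @ w))  # below log(2), about 0.693
```

An untrained model (all rewards equal) has loss `log(2)`; the loss falls as the reward model learns to rank preferred responses higher.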

4. Applications of Generative NLP

4.1 Conversational AI

Chatbots and virtual assistants leverage generative NLP for:

- Customer support and troubleshooting

- Personalized recommendations and engagement

- Interactive learning and tutoring

Modern AI models can maintain context over long conversations, up to the limit of their context window, and adapt responses dynamically.
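A model sees only what fits in its context window, so chat applications commonly resend the conversation history each turn and drop the oldest turns once it grows too long. A minimal sketch of that truncation policy, assuming word counts as a stand-in for a real tokenizer and always keeping the system prompt; `fit_to_context` is a hypothetical helper, and production systems may instead summarize older turns.

```python
from typing import Callable

Turn = tuple[str, str]  # (role, text)


def word_count(text: str) -> int:
    """Crude stand-in for a real tokenizer."""
    return len(text.split())


def fit_to_context(turns: list[Turn], max_tokens: int,
                   count_tokens: Callable[[str], int] = word_count) -> list[Turn]:
    """Keep the system prompt plus as many of the most recent turns as fit in max_tokens."""
    system, history = turns[0], turns[1:]
    budget = max_tokens - count_tokens(system[1])
    kept: list[Turn] = []
    for role, text in reversed(history):  # walk from newest to oldest
        cost = count_tokens(text)
        if cost > budget:
            break  # this turn and everything older is dropped
        kept.append((role, text))
        budget -= cost
    return [system] + kept[::-1]  # restore chronological order


conversation = [
    ("system", "You are a helpful support agent."),
    ("user", "My router keeps dropping the connection."),
    ("assistant", "Have you tried restarting it?"),
    ("user", "Yes, twice, and it still drops."),
]
for role, text in fit_to_context(conversation, max_tokens=18):
    print(f"{role}: {text}")
# With an 18-word budget the oldest user turn no longer fits and is dropped.
```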

4.2 Content Generation

Generative models are used for:

- Article writing and summarization

- Creative writing, poetry, and storytelling

- Marketing copy generation

- Code generation in software development (e.g., GitHub Copilot, Code Llama)

These applications are transforming content creation workflows and reducing time and effort, although generated output still requires human review to ensure quality.

4.3 Multimodal NLP

Recent advancements integrate NLP with other modalities:

- Text-to-Image Generation: Models like DALL-E and Imagen generate images from text prompts.

- Text-to-Audio/Video: Generative AI can produce speech, music, and even animated content.

- Cross-lingual Generation: Models generate translations and summaries across multiple languages, bridging communication gaps.

Multimodal AI demonstrates the synergy between language understanding and generation, enabling richer AI experiences.

5. Technical Challenges in Generative NLP

5.1 Bias and Ethical Concerns

Generative AI models inherit biases from training data, which can manifest as:

- Gender, racial, or cultural stereotypes

- Misinformation or hallucinated content

- Sensitive or harmful outputs

Addressing these challenges requires dataset curation, bias mitigation, and human oversight.

5.2 Computation and Energy Costs

Training large generative models consumes significant resources:

- Large clusters of GPUs or other accelerators for training

- High energy consumption and carbon footprint

- Optimization techniques like mixed-precision training and model pruning mitigate costs but do not eliminate them entirely

Sustainable AI practices are increasingly critical for the NLP field.
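Two back-of-the-envelope illustrations of the optimizations listed above. Mixed-precision training performs much of its computation in 16-bit floating point, and each 16-bit value takes half the memory of a 32-bit one (many setups still keep a 32-bit master copy of the weights). Magnitude pruning zeroes the weights with the smallest absolute values. The sketch counts weight storage only (gradients, optimizer state, and activations add substantially more during training), applies unstructured pruning to a single NumPy array, and uses an assumed 7-billion-parameter model size; the helper names are illustrative.

```python
import numpy as np


def weight_memory_gb(n_params: float, dtype) -> float:
    """Memory needed just to store n_params weights of the given dtype, in gigabytes."""
    return n_params * np.dtype(dtype).itemsize / 1e9


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out roughly the `sparsity` fraction of entries with the smallest magnitude."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # k-th smallest magnitude; entries at or below it are removed (ties may remove a few extra).
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) > threshold, weights, 0.0)


n_params = 7e9  # assumed model size for illustration
print(weight_memory_gb(n_params, np.float32))  # 28.0
print(weight_memory_gb(n_params, np.float16))  # 14.0

rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256))  # stand-in for one weight matrix
pruned = magnitude_prune(w, sparsity=0.9)
print(np.mean(pruned == 0))  # fraction of weights set to zero, close to 0.9
```

Zeroed weights only save compute or memory when the runtime exploits sparsity, which is one reason these techniques reduce costs without eliminating them.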

5.3 Evaluation and Reliability

Evaluating generative models is inherently difficult because output quality is partly subjective and a single prompt can have many valid responses. Common strategies include:

- BLEU, ROUGE, and METEOR for translation and summarization

- Human evaluation for creativity and coherence

- Automated scoring for factual consistency

Research continues on developing robust and objective evaluation frameworks.
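The n-gram metrics listed above share a core computation: counting how many n-grams a generated text shares with a reference. A simplified sketch, assuming whitespace tokenization and a single reference: clipped n-gram precision is the building block of BLEU, and n-gram recall corresponds to ROUGE-N. Full BLEU also combines several n-gram orders with a brevity penalty, so established implementations such as sacreBLEU are the usual choice for reported scores.

```python
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_precision_recall(candidate: str, reference: str, n: int = 1) -> tuple[float, float]:
    """Clipped n-gram precision (the core of BLEU) and n-gram recall (ROUGE-N)."""
    cand = ngram_counts(candidate.lower().split(), n)
    ref = ngram_counts(reference.lower().split(), n)
    # Counter & Counter keeps the minimum count per n-gram, so repeating a word
    # in the candidate cannot earn more credit than the reference supports.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    return precision, recall


print(ngram_precision_recall("the cat the cat", "the cat sat on the mat"))  # (0.75, 0.5)
print(ngram_precision_recall("a feline rested on the rug", "the cat sat on the mat"))
```

The paraphrase "a feline rested on the rug" shares only "on" and "the" with the reference and scores 1/3 on both measures despite a similar meaning, which illustrates why surface-overlap metrics are paired with human evaluation.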

6. The Future of NLP: Beyond Text

6.1 Foundation Models and Generalization

Foundation models, trained on massive, diverse datasets, enable general-purpose NLP capabilities, reducing the need for task-specific models.

Future trends include:

- Cross-domain generalization: Models that can handle text, code, images, and audio seamlessly

- Few-shot learning at scale: Further reducing reliance on labeled datasets

6.2 Human-AI Collaboration

Generative NLP is evolving into collaborative AI, assisting humans in creative, technical, and professional tasks. Applications include:

- Co-authoring reports and research papers

- Assisting software development and debugging

- Personalized tutoring and knowledge synthesis

6.3 Regulatory and Governance Considerations

As NLP capabilities grow, so does the need for responsible AI governance:

- Data privacy and protection regulations

- Guidelines for safe deployment of AI in public-facing applications

- Mechanisms to monitor and mitigate harmful outputs

Governance aims to ensure that AI generation complements human expertise safely and ethically.

Conclusion

Natural Language Processing has undergone a profound transformation, evolving from basic language understanding to advanced generative capabilities. With the advent of transformers, large language models, and multimodal AI, NLP technologies are now capable of creating human-like text, multimedia content, and code, reshaping industries from content creation to healthcare, finance, and education.

While challenges in bias, computation, and ethical deployment remain, the future of NLP is marked by integration, generalization, and collaboration. Generative AI is no longer merely a supplement to human communication; it is becoming an essential tool for creativity, decision-making, and problem-solving.

The shift from understanding to generation represents not just a technological evolution, but a paradigm shift in how humans interact with machines, unlocking new possibilities for innovation, efficiency, and global communication.