Introduction

For much of its recent popular history, artificial intelligence (AI) has been synonymous with text: chatbots that converse fluently, and large language models that summarize documents, generate code, translate languages, and write essays that can be difficult to distinguish from human writing. While these achievements are remarkable, they represent only one dimension of intelligence. Human intelligence is not text-centric; it is grounded in perception, action, and interaction with the physical world.

Today, AI is undergoing a profound transformation. No longer confined to text generation, it is rapidly expanding into vision, multimodal perception, embodied reasoning, and physical robotics. This shift marks a transition from disembodied intelligence—systems that operate purely in symbolic or textual spaces—toward integrated, embodied AI systems capable of seeing, hearing, touching, reasoning, and acting in real environments.

This article explores this transition in depth. We examine the technological foundations of multimodal AI, the rise of perception-driven models, the convergence of AI and robotics, and the implications of embedding intelligence into physical agents. We also discuss challenges, ethical considerations, and future directions, arguing that the next era of AI will be defined not by better text alone, but by holistic intelligence grounded in the physical world.

1. From Language-Centric AI to Multimodal Intelligence

1.1 The Limits of Text-Only Intelligence

Large language models (LLMs) have demonstrated that statistical learning over massive textual corpora can yield powerful reasoning, abstraction, and generalization capabilities. However, text-only intelligence has inherent limitations:

- Lack of grounding: Words refer to the world, but text alone does not provide direct sensory grounding.

- Fragile world models: Without perception, AI systems rely on secondhand descriptions of reality.

- No physical agency: Text-based systems cannot act directly on the environment.

Human cognition, by contrast, emerges from continuous interaction between perception, action, and reasoning. Language is layered on top of sensorimotor experience, not isolated from it.

1.2 The Emergence of Multimodal AI

Multimodal AI seeks to bridge this gap by integrating multiple forms of input and output, such as:

- Vision (images, video)

- Audio (speech, environmental sound)

- Text (language, symbols)

- Sensor data (touch, force, proprioception)

- Action (movement, manipulation)

Instead of processing each modality independently, modern systems learn shared representations that align vision, language, and action in a unified latent space. This alignment allows AI to reason across modalities—describing what it sees, acting on verbal instructions, or explaining its physical actions in natural language.
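Alignment in a shared latent space can be illustrated with a contrastive objective of the kind popularized by CLIP-style vision-language training. The sketch below is a minimal NumPy version under stated assumptions. The embeddings are random stand-ins for the outputs of hypothetical image and text encoders. Row i of each matrix is treated as a matching pair. The temperature of 0.07 is only an illustrative value. The loss is low when each image is most similar to its own caption and each caption to its own image; minimizing it is what pulls matching pairs together.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss; row i of each matrix is assumed to be a matching pair."""
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature        # (N, N): image i vs. text j
    idx = np.arange(len(logits))              # the correct match sits on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image picks its caption
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each caption picks its image
    return float((loss_i2t + loss_t2i) / 2)

rng = np.random.default_rng(0)
text = rng.normal(size=(8, 32))                          # stand-in text embeddings
aligned_images = text + 0.1 * rng.normal(size=(8, 32))   # images close to their captions
shuffled_images = np.roll(aligned_images, 1, axis=0)     # every pair deliberately broken

aligned_loss = contrastive_loss(aligned_images, text)
mismatched_loss = contrastive_loss(shuffled_images, text)
print(f"aligned: {aligned_loss:.3f}  mismatched: {mismatched_loss:.3f}")
```

Here the loss is only evaluated, not optimized. In training, the gradients of this loss would update both encoders until matching image and text embeddings land near each other.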

2. Vision as a Foundation of Intelligence

2.1 Computer Vision Beyond Recognition

Early computer vision systems focused on narrow tasks such as object classification or face detection. Today’s vision models are far more capable, addressing complex problems including:

- Scene understanding and semantic segmentation

- 3D reconstruction and depth estimation

- Motion prediction and visual tracking

- Visual reasoning and relational understanding

Vision is no longer just about recognizing objects; it is about understanding environments.

2.2 Vision-Language Models

One of the most significant advances in recent years is the development of vision-language models (VLMs). These models learn joint representations of images and text, enabling capabilities such as:

- Image captioning and visual storytelling

- Visual question answering

- Instruction-following based on visual context

- Cross-modal retrieval (text-to-image, image-to-text)

By aligning pixels with words, VLMs enable AI systems to “talk about what they see” and “see what they talk about,” a crucial step toward human-like understanding.
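Once images and text share an embedding space, cross-modal retrieval reduces to a nearest-neighbour search. The sketch below is minimal and assumes the embeddings were already produced by some vision-language encoder. The three-dimensional vectors and the captions in the comments are invented for illustration.

```python
import numpy as np

def retrieve(query: np.ndarray, gallery: np.ndarray, k: int = 2) -> list[int]:
    """Return indices of the k gallery items most similar (by cosine) to the query."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    scores = g @ q                       # cosine similarity of each gallery item
    return np.argsort(-scores)[:k].tolist()

# Toy 3-d "shared space"; a real VLM would supply these from its encoders.
image_embeddings = np.array([
    [0.9, 0.1, 0.0],   # image 0: e.g. a photo of a dog
    [0.1, 0.9, 0.1],   # image 1: e.g. a photo of a car
    [0.7, 0.3, 0.1],   # image 2: e.g. a dog next to a car
])
text_query = np.array([1.0, 0.0, 0.0])  # e.g. the embedding of "a dog"

print(retrieve(text_query, image_embeddings))  # → [0, 2]
```

Image-to-text retrieval works the same way with the roles swapped: embed an image as the query and search a gallery of caption embeddings.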

3. Perception: From Passive Sensing to Active Understanding

3.1 Perception as an Active Process

In biological systems, perception is not passive data collection—it is an active process driven by goals, attention, and action. Modern AI increasingly mirrors this approach:

- Active vision systems move cameras to reduce uncertainty

- Embodied agents explore environments to learn affordances

- Attention mechanisms prioritize task-relevant sensory input

This shift from static perception to active sensing allows AI to build richer and more robust world models.
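One common way to formalize "moving the camera to reduce uncertainty" is to pick the viewpoint whose expected observation leaves the least uncertainty, measured as expected posterior entropy. The sketch below is a one-step greedy version of that idea, not the method of any particular system. The object classes, viewpoints, and likelihood tables are hypothetical.

```python
import numpy as np

def entropy(p: np.ndarray) -> float:
    """Shannon entropy in bits, ignoring zero-probability entries."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def expected_posterior_entropy(belief: np.ndarray, likelihood: np.ndarray) -> float:
    """Average uncertainty remaining after looking from one viewpoint.

    likelihood[c, o] = P(observation o | object class c) for that viewpoint.
    """
    p_obs = belief @ likelihood                          # P(o) = sum_c P(c) * P(o | c)
    total = 0.0
    for o, po in enumerate(p_obs):
        if po > 0:
            posterior = belief * likelihood[:, o] / po   # Bayes' rule
            total += po * entropy(posterior)
    return total

def best_viewpoint(belief: np.ndarray, viewpoints: dict[str, np.ndarray]) -> str:
    """Greedily choose the viewpoint expected to reduce uncertainty the most."""
    return min(viewpoints,
               key=lambda name: expected_posterior_entropy(belief, viewpoints[name]))

# Hypothetical example: is the object a mug (class 0) or a bowl (class 1)?
belief = np.array([0.5, 0.5])
viewpoints = {
    "top":  np.array([[0.5, 0.5], [0.5, 0.5]]),   # from above, both look alike
    "side": np.array([[0.9, 0.1], [0.1, 0.9]]),   # from the side, a handle is usually visible
}
print(best_viewpoint(belief, viewpoints))  # → side
```

Looking from the top leaves a full bit of uncertainty, while the side view is expected to leave under half a bit, so the agent moves its camera to the side before committing to an answer.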

3.2 Multisensory Integration

Human perception integrates multiple senses seamlessly. Similarly, advanced AI systems combine:

- Vision and audio for audiovisual understanding

- Vision and touch for object manipulation

- Proprioception and force sensing for motor control

Multisensory integration improves robustness, especially in real-world conditions where any single sensor may be noisy or incomplete.
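The robustness benefit is easiest to see in the simplest fusion rule: combining independent estimates weighted by their inverse variance. This sketch assumes each sensor reports a Gaussian estimate with known variance and that the sensors' errors are independent. The distance readings below are made-up numbers.

```python
def fuse(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent Gaussian estimates given as (mean, variance) pairs.

    Each estimate is weighted by its precision (1 / variance), so noisier
    sensors automatically count for less.
    """
    precision = sum(1.0 / var for _, var in estimates)
    mean = sum(m / var for m, var in estimates) / precision
    return mean, 1.0 / precision

# Distance to an object: camera says 2.0 m (variance 0.04), depth sensor says 2.2 m (variance 0.01).
mean, var = fuse([(2.0, 0.04), (2.2, 0.01)])
print(round(mean, 3), round(var, 4))  # → 2.16 0.008
```

The fused variance (0.008) is lower than either sensor's alone. If one sensor degrades and its reported variance grows, its weight shrinks toward zero and the estimate falls back on the other sensor.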

4. Embodied AI: Intelligence with a Physical Body

4.1 What Is Embodied AI?

Embodied AI refers to intelligent systems that:

- Exist in a physical or simulated body

- Perceive the environment through sensors

- Act on the environment through effectors

- Learn from interaction and feedback

Examples include mobile robots, robotic arms, autonomous vehicles, and humanoid robots.

4.2 Why Embodiment Matters

Embodiment provides three critical advantages:

- Grounding: Concepts are tied to physical experience.

- Causality: Actions produce observable effects, enabling causal learning.

- Adaptation: Agents learn by trial, error, and exploration.

Without embodiment, AI may excel at abstract reasoning but struggle with common-sense physical tasks that humans find trivial.

5. The Convergence of AI and Robotics

5.1 From Rule-Based Robots to Learning-Based Systems

Traditional robots relied on:

- Predefined rules

- Precise environment models

- Structured, predictable settings

Modern AI-driven robots instead leverage:

- Deep learning for perception

- Reinforcement learning for control

- Foundation models for generalization

This transition increasingly allows robots to operate in unstructured, dynamic environments such as homes, hospitals, and warehouses.

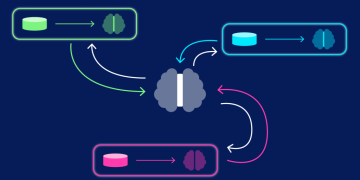

5.2 Foundation Models for Robotics

A key trend is the application of large foundation models—originally developed for language and vision—to robotics. These models:

- Generalize across tasks

- Learn from diverse datasets

- Enable zero-shot or few-shot learning

By conditioning robotic behavior on language and perception, such models aim to let robots follow high-level instructions without task-specific programming.

6. Learning Through Interaction and Simulation

6.1 Reinforcement Learning in the Real World

Reinforcement learning (RL) allows agents to learn policies through trial and error. In robotics, RL faces challenges such as:

- Sample inefficiency

- Safety risks

- Hardware wear and cost

To address this, researchers increasingly rely on simulation-to-reality (sim-to-real) transfer.
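The trial-and-error learning described in 6.1 can be illustrated with tabular Q-learning, one classic RL algorithm, on a deliberately tiny problem. The five-cell corridor, the reward, and the hyperparameters are invented for illustration. Real robot control involves continuous states and actions and much larger function approximators, which is where sample inefficiency becomes a serious cost.

```python
import random

N_STATES = 5          # positions 0..4 on a corridor; state 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state: int, action: int) -> tuple[int, float, bool]:
    """Toy environment: walls at both ends, reward 1 for reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def q_learning(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit, sometimes explore; break ties at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)  # → ['right', 'right', 'right', 'right']
```

Even this toy agent needs many episodes of wandering before value estimates propagate back from the goal to the start, which hints at why running the same loop on physical hardware is slow, risky, and costly.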

6.2 Digital Twins and Simulated Environments

Simulated environments provide:

- Scalable data generation

- Safe experimentation

- Rapid iteration

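Domain randomization helps close the gap between simulation and reality. By varying simulator parameters such as friction, object mass, lighting, and sensor noise during training, it makes the real world look like just one more variation the model has already seen. When combined with real-world fine-tuning, simulation-trained models can often generalize effectively to physical systems.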
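Domain randomization can be sketched as sampling a fresh set of simulator parameters for every training episode. The parameter names, the ranges, and the `run_episode` callback below are hypothetical placeholders, not the interface of any particular simulator. A real setup would pass each sampled configuration to a physics engine and train a policy on the resulting episode.

```python
import random
from dataclasses import dataclass

@dataclass
class SimParams:
    friction: float
    object_mass_kg: float
    light_intensity: float
    camera_noise_std: float

# Hypothetical ranges; in practice they are chosen per robot and task.
RANGES = {
    "friction": (0.5, 1.2),
    "object_mass_kg": (0.1, 0.5),
    "light_intensity": (0.3, 1.0),
    "camera_noise_std": (0.0, 0.05),
}

def sample_params(rng: random.Random) -> SimParams:
    """Draw every parameter uniformly from its range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

def train_with_randomization(run_episode, n_episodes: int, seed: int = 0) -> None:
    """Call run_episode(params) once per episode, each time in a freshly randomized world."""
    rng = random.Random(seed)
    for _ in range(n_episodes):
        run_episode(sample_params(rng))

# Stand-in for a simulator: just record which worlds were generated.
seen: list[SimParams] = []
train_with_randomization(seen.append, n_episodes=1000)
print(f"friction seen: {min(p.friction for p in seen):.2f} to {max(p.friction for p in seen):.2f}")
```

The design choice is to make the ranges wide enough that the real robot's (unknown) parameters plausibly fall inside them. A policy that succeeds across all sampled worlds then has a better chance of succeeding in the real one.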

7. Human-Robot Interaction and Social Intelligence

7.1 Communication Beyond Text

As robots enter human environments, they must understand and express:

- Natural language

- Gestures and body language

- Social norms and intent

This requires integrating perception, language, and action in real time.

7.2 Trust, Transparency, and Explainability

Human acceptance of AI-driven robots depends on:

- Predictable behavior

- Clear communication

- Explainable decision-making

Multimodal AI can help by enabling robots to explain actions verbally, visually, or through demonstration.

8. Applications Across Industries

8.1 Healthcare and Assistive Robotics

In healthcare, embodied AI enables:

- Surgical assistance with visual precision

- Rehabilitation robots that adapt to patients

- Elderly care robots providing physical and social support

These systems combine perception, reasoning, and safe physical interaction.

8.2 Manufacturing and Logistics

AI-powered robots transform factories and warehouses by:

- Adapting to variable objects and layouts

- Collaborating safely with humans

- Optimizing workflows through perception-driven decision-making

8.3 Autonomous Vehicles and Drones

Autonomous systems rely heavily on:

- Visual perception

- Sensor fusion

- Real-time decision-making

Their progress illustrates the power of integrated AI systems operating in complex physical environments.
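Sensor fusion in moving vehicles is often built on the Kalman filter, which alternates between predicting the state from a motion model and correcting it with new measurements. The sketch below is a textbook one-dimensional version, not the design of any specific vehicle stack. It assumes known noise variances, odometry as the motion input, and a GPS-like position sensor. Real vehicles track many state variables at once.

```python
import random

def kalman_step(x: float, p: float, u: float, z: float,
                q: float, r: float) -> tuple[float, float]:
    """One predict/update cycle of a 1-D Kalman filter.

    x, p: current position estimate and its variance
    u:    displacement reported by odometry since the last step
    z:    noisy position measurement (e.g. a GPS fix)
    q, r: process and measurement noise variances (assumed known)
    """
    # Predict: move the estimate using odometry; uncertainty grows.
    x_pred = x + u
    p_pred = p + q
    # Update: blend prediction and measurement by their relative confidence.
    k = p_pred / (p_pred + r)          # Kalman gain, between 0 and 1
    x_new = x_pred + k * (z - x_pred)
    p_new = (1 - k) * p_pred
    return x_new, p_new

# Hypothetical scenario: a vehicle moves 1.0 m per step along a straight line.
rng = random.Random(0)
true_pos, x, p = 0.0, 0.0, 1.0
for _ in range(20):
    true_pos += 1.0
    odometry = 1.0 + rng.gauss(0.0, 0.1)   # wheel odometry, slightly noisy
    gps = true_pos + rng.gauss(0.0, 0.7)   # GPS fix, much noisier
    x, p = kalman_step(x, p, odometry, gps, q=0.1 ** 2, r=0.7 ** 2)
print(f"true {true_pos:.2f}  estimate {x:.2f}  variance {p:.3f}")
```

After each update, the filter's variance is smaller than both the predicted variance and the measurement variance. That reduction is the formal sense in which fusing a motion model with a noisy sensor beats trusting either alone.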

9. Ethical, Safety, and Societal Considerations

9.1 Safety in Embodied AI

When AI systems act in the physical world, errors can cause real harm. Key concerns include:

- Robustness to edge cases

- Safe exploration and learning

- Fail-safe mechanisms

Safety must be a foundational design principle, not an afterthought.
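One common pattern for a fail-safe mechanism is a small, rule-based safety layer that sits between a learned policy and the motors and can clamp or override the policy's commands. The sketch below assumes a mobile robot commanded by linear and angular velocity. The `Command` type, the thresholds, and the sensor inputs are hypothetical. A real system would add hardware-level protections such as emergency stops.

```python
from dataclasses import dataclass

@dataclass
class Command:
    linear_velocity: float   # m/s, positive = forward
    angular_velocity: float  # rad/s

STOP = Command(0.0, 0.0)

def safety_filter(cmd: Command, obstacle_distance_m: float,
                  seconds_since_last_sensor: float,
                  max_speed: float = 0.5, stop_distance_m: float = 0.3,
                  sensor_timeout_s: float = 0.2) -> Command:
    """Pass through, clamp, or override a policy's command using fixed rules."""
    # Fail safe: if perception is stale, no command can be trusted.
    if seconds_since_last_sensor > sensor_timeout_s:
        return STOP
    linear = cmd.linear_velocity
    # Too close to an obstacle: forbid forward motion but allow backing away.
    if obstacle_distance_m < stop_distance_m:
        linear = min(linear, 0.0)
    # Enforce a hard speed limit regardless of what the policy asked for.
    linear = max(-max_speed, min(max_speed, linear))
    return Command(linear, cmd.angular_velocity)

print(safety_filter(Command(2.0, 0.1), obstacle_distance_m=5.0, seconds_since_last_sensor=0.01))
```

Keeping this layer small and independent of the learned model means its behavior can be reviewed and tested exhaustively, even when the policy itself cannot be.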

9.2 Bias, Accountability, and Control

Embodied AI inherits biases from data and design choices. Moreover, assigning responsibility for autonomous actions raises complex legal and ethical questions. Transparent governance frameworks are essential as AI systems gain physical agency.

10. The Future: Toward General-Purpose Embodied Intelligence

10.1 From Narrow Skills to General Capability

A central long-term goal of AI research is not isolated systems for specific tasks, but general-purpose embodied agents that can:

- Learn continuously

- Transfer knowledge across domains

- Collaborate with humans naturally

Such systems would represent a qualitative leap in artificial intelligence.

10.2 Co-Evolution of Hardware and Intelligence

Progress will depend on the co-design of:

- Intelligent algorithms

- Advanced sensors

- Adaptive, energy-efficient hardware

Soft robotics, neuromorphic sensors, and bio-inspired designs are likely to play an increasing role.

Conclusion

AI is undergoing a fundamental evolution. No longer confined to generating text, it is expanding into vision, perception, and embodied robotics—domains that anchor intelligence in the physical world. This transition marks a shift from abstract symbol manipulation to grounded, interactive, and integrated intelligence.

As multimodal models unify language, vision, and action, and as robots learn through interaction with real environments, the boundary between digital intelligence and physical agency continues to blur. The future of AI will not be defined solely by what machines can say, but by what they can see, understand, and do.

In embracing this broader conception of intelligence, we move closer to AI systems that are not only more capable, but also more aligned with the way humans perceive, learn, and act in the world.