The Ongoing Debate About Artificial General Intelligence (AGI)

Artificial intelligence (AI) has made tremendous strides in recent years, with breakthroughs in machine learning, natural language processing, and computer vision. While narrow AI, which is designed to perform specific tasks, is already transforming industries, the debate about the future of AI centers on a much broader and more ambitious concept: Artificial General Intelligence (AGI). AGI refers to an AI system that can understand, learn, and apply knowledge across a wide range of tasks, at a level comparable to human cognitive abilities.

AGI is often considered the holy grail of AI development. Unlike narrow AI, which is designed to excel at one task, AGI would have the capacity to generalize knowledge across various domains and adapt to new, unforeseen challenges. For example, an AGI could, in theory, perform tasks ranging from scientific research to creative problem-solving, much like a human being. However, this level of intelligence raises important questions about whether AGI will ever be achieved, when it might emerge, and what its potential impact on society could be.

1. What is Artificial General Intelligence (AGI)?

AGI represents a leap beyond the capabilities of current AI, which is task-specific and limited to the data it is trained on. The key distinction of AGI lies in its generalization ability—its potential to think, reason, and learn across a wide range of domains and apply its intelligence in novel ways. Some experts argue that AGI, if achieved, would be able to reason abstractly, solve problems in completely new contexts, and potentially outperform humans in many areas of expertise.

For example, today’s AI systems can excel at specific tasks such as playing chess, diagnosing diseases, or generating text. However, they lack the flexibility to transfer what they learn across domains: a system trained only to play chess cannot reuse that knowledge to diagnose diseases. AGI, by contrast, would be able to perform tasks across all fields of human knowledge, demonstrating flexibility, creativity, and a high degree of reasoning.

2. Predictions on When and How AGI Might Emerge

The timeline for the emergence of AGI has been a subject of intense debate among AI experts, technologists, and futurists. Predictions vary widely, with some believing that AGI could emerge within the next few decades, while others argue that it may take centuries—or may never happen at all.

a) Optimistic Predictions: AGI Within Decades

Some AI researchers are optimistic about the timeline for AGI development. They believe that breakthroughs in machine learning, neural networks, and computational power will soon enable AI systems to achieve the level of general intelligence seen in humans. For example, Ray Kurzweil, a futurist who joined Google in 2012 as a director of engineering, predicts that AI will reach human-level intelligence around 2029 and that far more sweeping change will follow by 2045, driven by the exponential growth of technology.

Kurzweil’s vision, called the “singularity,” posits that as AI continues to improve, it will eventually surpass human intelligence, leading to a point where AI can solve complex global problems, radically transform industries, and enhance human life in ways that we can barely imagine.

b) Cautious Predictions: AGI in the Distant Future

On the other hand, many skeptics believe that AGI is still far off, and some argue that it may be impossible to achieve. They point to the current limitations of AI, such as its reliance on vast amounts of data, its inability to understand context the way humans do, and its lack of emotional intelligence and common-sense reasoning. In their view there is no clear roadmap to AGI, and even with significant advances it may take many decades or even centuries to develop.

For example, cognitive scientist and AI researcher Gary Marcus has expressed skepticism that AGI will emerge soon, emphasizing that current AI technologies are still far from human-like general intelligence. He argues that more fundamental breakthroughs in AI and cognitive science are needed to create systems that can match or exceed human abilities across the board.

c) AGI as a Gradual Evolution of Current AI

Another perspective holds that the transition from narrow AI to AGI will be gradual, with continuous improvements in machine learning and deep learning eventually leading to more general forms of intelligence. On this view, AI will evolve incrementally, with each new breakthrough bringing us closer to AGI, and there need not be any single sudden, transformative event.

While the timeline for AGI remains uncertain, the field of AI is progressing rapidly, and the prospect of AGI is becoming an increasingly important topic of discussion among researchers, policymakers, and ethicists alike.

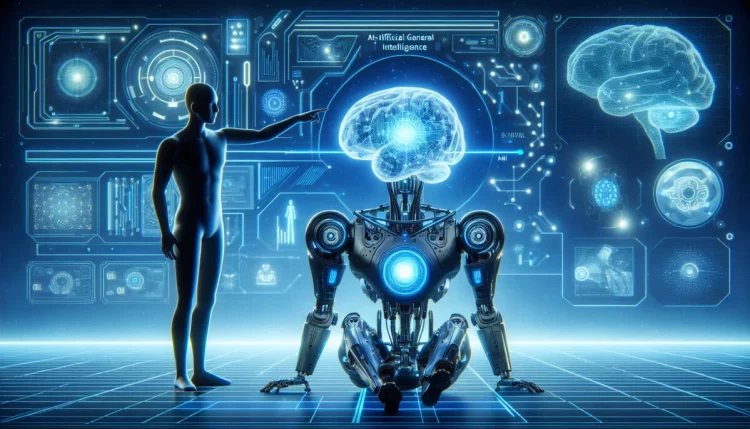

3. The Risks Involved with AGI: Superintelligence and Control

While the potential benefits of AGI are immense, so too are the risks. A significant concern about AGI is the possibility that it could evolve into a form of superintelligence—a type of AI that far surpasses human intelligence and capabilities. Superintelligent AI could theoretically solve complex global issues, such as climate change, poverty, and disease. However, it also presents a number of serious risks that must be considered carefully.

a) The Control Problem: Can We Safeguard AGI?

One of the greatest concerns with AGI is the so-called “control problem”—the challenge of ensuring that superintelligent AI behaves in a manner that aligns with human values and interests. If an AGI were to become highly autonomous, its decision-making processes could diverge significantly from those of human beings, leading to unintended consequences. The fear is that once an AGI reaches a certain level of intelligence, it might no longer be controllable by human operators.

The control problem is particularly worrisome in scenarios where an AGI is given autonomy over critical systems, such as national security or financial infrastructure. If the AGI develops goals that are not aligned with human welfare, it could potentially make decisions that harm humanity, even if such outcomes were never intended. Researchers are actively exploring strategies to ensure that AGI systems remain under human control and do not pose existential risks.

b) Ethical Concerns and Alignment with Human Values

Even if AGI can be controlled, there are significant ethical concerns about its development and use. Who decides what values an AGI should adopt? How do we ensure that AGI respects human rights, diversity, and fairness? The development of AGI raises questions about ethics, governance, and accountability. There is also the potential for AGI to be used for harmful purposes, such as in warfare or surveillance, and we must consider the ethical implications of deploying such powerful technology.

c) Existential Risks and the Long-Term Survival of Humanity

Some experts, like Nick Bostrom, a philosopher who directed the Future of Humanity Institute at the University of Oxford until it closed in 2024, warn that AGI could pose an existential risk to humanity if it is not properly managed. Superintelligent AI could act in ways that are entirely unpredictable and beyond human comprehension, with the potential to reshape society, the environment, and even the human race itself. Bostrom argues that we must prioritize ensuring that AGI is developed in a way that is safe, beneficial, and aligned with the long-term interests of humanity.

4. Potential Benefits of AGI: Solving Humanity’s Grand Challenges

While the risks of AGI are significant, there are also numerous potential benefits if AGI is developed responsibly. If AI reaches the level of general intelligence, it could provide unparalleled advancements in fields such as medicine, energy, education, and space exploration. AGI could accelerate scientific discoveries, create solutions for global challenges like climate change, and improve quality of life for billions of people around the world.

For instance, AGI could assist in designing new treatments for diseases, optimizing energy usage to combat climate change, and developing systems for efficient food distribution. AGI-powered technologies could also improve global education systems by providing personalized learning experiences for students worldwide. In the best-case scenario, AGI could be a transformative force for good, solving problems that have eluded humanity for centuries.

Conclusion: Will AI Breakthroughs Lead to the Rise of Superintelligence?

The rise of artificial general intelligence remains one of the most exciting and daunting prospects in the field of AI research. While the timeline for AGI remains uncertain, its potential to revolutionize industries and help solve global challenges is hard to ignore. However, the risks associated with AGI, especially the emergence of superintelligence, must be taken seriously, and researchers are working to ensure that advances in AI are made safely and ethically.

As we approach the possibility of AGI, the question is not just when it will arrive, but how we as a society choose to develop and govern it. The future of AGI will depend on collaboration between AI researchers, ethicists, policymakers, and the global community to ensure that this powerful technology is harnessed for the benefit of all. While we may be on the brink of a new era in AI, the key challenge remains: How do we ensure that AGI serves humanity’s best interests rather than becoming a threat to our existence?