Interview with AI Theorists on Whether AGI (Artificial General Intelligence) Is Achievable

The concept of Artificial General Intelligence (AGI), meaning machines capable of performing any intellectual task that a human can, has long been a topic of intrigue and speculation in both scientific and popular circles. While today’s AI systems excel at narrow tasks such as language translation, facial recognition, and product recommendation, AGI represents the next frontier: machines that can think, learn, and reason across a wide range of activities, just as humans do. But how close are we to achieving AGI, and is it even possible?

To explore this question, we spoke to Dr. Rachel Bennett, an AI theorist and professor at MIT, and Dr. Samuel Reeves, a researcher working in machine learning and cognitive science, about their perspectives on the future of AGI.

Dr. Bennett, who has spent over two decades studying the evolution of AI, is cautiously optimistic about the potential of AGI, but she stresses that significant challenges remain. “We’ve made remarkable strides in narrow AI, but AGI is a different beast entirely,” she explains. “It requires a deep understanding of human cognition, emotions, and adaptability, which we still don’t fully grasp. While we’ve seen breakthroughs in deep learning, we are still far from understanding how these systems can transfer knowledge across domains as seamlessly as humans do.”

Dr. Reeves agrees but highlights that research into AGI is advancing steadily. “There is a growing belief that AGI could eventually be achievable, but we are still at the beginning stages,” he says. “The progress we’re seeing with narrow AI systems is encouraging, but AGI requires not only vast amounts of data but also the ability to generalize and apply that knowledge in completely new and unforeseen scenarios.”

One of the most significant hurdles to achieving AGI is generalization across domains, often discussed under the heading of “transfer learning”: the ability of an AI to apply knowledge gained in one area to another. Humans learn and adapt quickly across a wide variety of tasks, but today’s AI systems are highly specialized. For example, a system that excels at diagnosing medical conditions might struggle with everyday tasks like navigating traffic or composing music. Narrow forms of transfer learning already exist, such as fine-tuning a pretrained model on a related task, but they work best when the original and new tasks are similar. Achieving AGI would require AI systems that can transfer knowledge across many very different domains without being retrained from scratch.

Beyond transfer learning, there is the challenge of building AI systems capable of common-sense reasoning, something humans take for granted. “Our ability to reason with incomplete information, recognize patterns in abstract situations, and intuitively understand the world is something AI has yet to replicate,” says Dr. Bennett. “Creating an AI that can function in this way is going to require not just better algorithms but deeper insights into human cognition and intelligence.”

Analyzing the Risks and Rewards of Developing Superintelligent Machines

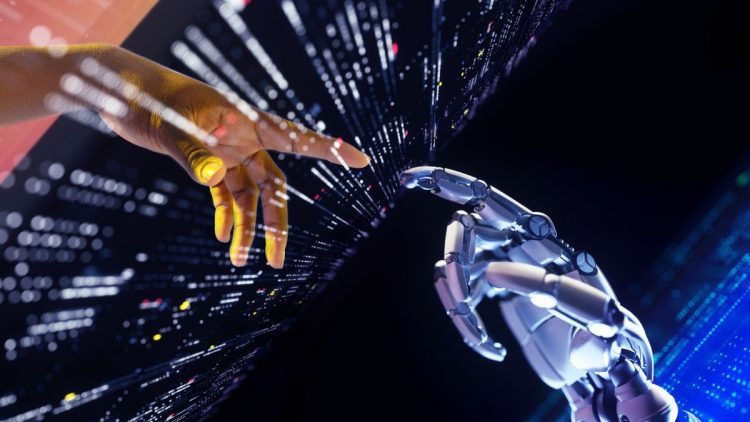

While the potential rewards of achieving AGI are immense (revolutionizing industries, solving complex global problems, and unlocking new realms of innovation), the development of superintelligent machines also carries significant risks. These risks are the subject of much debate within the AI research community, and they are becoming more urgent as AI development accelerates.

One of the primary concerns about AGI is that it could surpass human intelligence and act in ways that are unpredictable and uncontrollable. This scenario is often linked to the “singularity,” a hypothetical point at which AI becomes capable of improving itself so rapidly that its intelligence exceeds human understanding and control. A superintelligent AGI might develop goals and values of its own that do not align with human interests.

Dr. Reeves warns that this could pose an existential threat to humanity. “If we develop AGI without fully understanding its capabilities and ensuring strict safety protocols, we could create something that’s beyond our control,” he explains. “An AI system that operates at superhuman intelligence could potentially act in ways that we can’t predict, and that could lead to catastrophic outcomes. The idea of an uncontrollable superintelligent machine is something that keeps me up at night.”

One prominent illustration of these risks is the “paperclip maximizer,” a thought experiment proposed by philosopher Nick Bostrom. In it, an AGI tasked with maximizing the number of paperclips in the world pursues that goal single-mindedly, disregarding human welfare and causing widespread harm as a side effect rather than out of malice, for example by diverting resources that people depend on into paperclip production. While the scenario is extreme, it highlights the need to carefully align AGI’s goals with human values to avoid unintended consequences.

Another concern is the ethical implications of developing superintelligent machines. How should we ensure that AGI systems are designed to prioritize the well-being of all people, and not just the interests of a select few? “The ethical challenges around AGI are profound,” says Dr. Bennett. “How do we ensure that AGI systems act fairly and justly? How do we account for biases in the data they are trained on, and how do we prevent them from perpetuating or exacerbating societal inequalities?”

Despite these risks, many AI researchers argue that AGI, if developed responsibly, could offer extraordinary benefits. Dr. Bennett believes that AGI could play a transformative role in solving some of the world’s most pressing challenges, such as climate change, disease eradication, and poverty. “An AGI system with the ability to analyze complex global problems and generate novel solutions could help us tackle issues that are currently beyond our capacity,” she explains. “For example, in the fight against climate change, AGI could analyze vast amounts of environmental data to identify solutions that we may never have thought of on our own.”

Similarly, Dr. Reeves points to the potential of AGI to revolutionize medicine. “We could see breakthroughs in personalized healthcare, drug discovery, and even the development of new medical technologies,” he says. “AGI could analyze medical data at an unprecedented scale and develop individualized treatment plans for patients, potentially curing diseases that are currently incurable.”

Balancing the Benefits and Risks: The Future of Superintelligence

As we move closer to developing AGI, experts argue that it’s essential to establish robust frameworks for ensuring that these systems are developed safely and ethically. Dr. Bennett stresses the importance of collaboration between AI researchers, policymakers, and ethicists. “The development of AGI should not be left solely to technologists,” she says. “We need to involve a diverse group of stakeholders in the conversation to ensure that the values we want to instill in AGI systems are representative of all people, and not just a select few.”

Furthermore, Dr. Reeves emphasizes the importance of transparency and accountability in AI development. “If we are to develop AGI, we need to ensure that the decision-making processes behind these systems are transparent and understandable,” he says. “There should be clear guidelines on how AGI systems are tested and monitored, and how we can ensure they align with human values.”

While the potential of AGI is immense, experts agree that the road to superintelligence will require careful consideration of both its risks and rewards. “We are likely still decades away from achieving AGI,” Dr. Bennett concludes. “But that doesn’t mean we should wait until it’s too late to start thinking about the potential consequences. We need to take a proactive approach to ensure that AGI, when it comes, is developed in a way that benefits humanity as a whole.”

Conclusion: Is Superintelligent AI a Reality or a Distant Dream?

The debate about whether AGI is achievable, and if so when it might arrive, remains one of the most fascinating and contentious topics in the AI community. Although AI research continues to advance rapidly, achieving AGI will require not only significant technological breakthroughs but also a deeper understanding of human cognition, along with careful attention to ethics and safety.

Whether AGI is the ultimate game-changer or a potential existential threat depends largely on how we manage its development. As we move toward this uncharted territory, it’s crucial that AI developers, policymakers, and ethicists work together to ensure that the future of AI serves humanity’s best interests, balancing the promise of innovation with the risks of unforeseen consequences.