Introduction

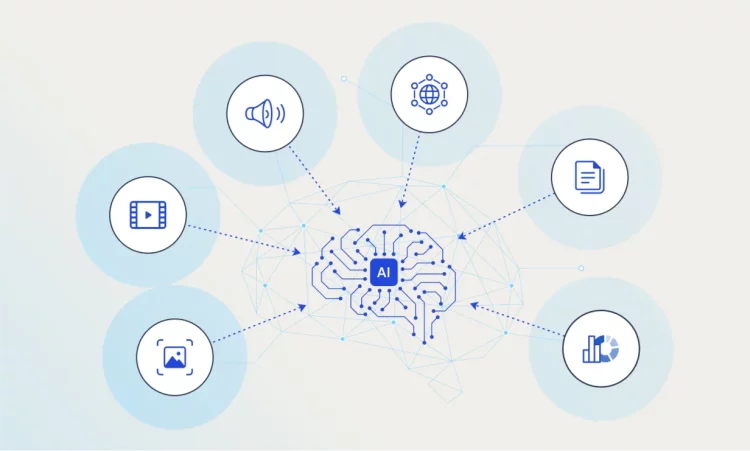

The rapid advancement of artificial intelligence (AI) has produced increasingly sophisticated models that can handle complex data tasks across a variety of domains. Traditionally, AI models have been designed to work with a single type of data, such as text, images, or audio. The real world, however, presents information in many forms at once, so there is a growing need for AI systems that can process and understand multiple forms of data simultaneously. This is where multimodal AI comes in.

Multimodal AI refers to the ability of a system to process and integrate information from multiple data sources or modalities, such as text, images, audio, and video, to create a richer, more comprehensive understanding of the data. The ability to combine diverse modalities allows these systems to generate deeper insights, improve decision-making, and perform more complex tasks that were previously challenging for traditional AI systems.

This article delves into the concept of multimodal AI, exploring its components, applications, and the key advancements in the field. We will also address the challenges faced in integrating multimodal data and examine the future potential of multimodal AI in transforming industries such as healthcare, autonomous driving, and customer service.

What is Multimodal AI?

Defining Multimodal AI

At its core, multimodal AI integrates data from different sources (or modalities) to create a unified understanding. For example, a multimodal AI system could combine text data from a document, images from a photograph, and audio from a conversation to improve its comprehension of a situation. By combining multiple types of information, multimodal AI is able to make better inferences, generate more accurate predictions, and handle a broader range of tasks.

Traditional AI models are often specialized in a single modality:

- Text-based models, such as Natural Language Processing (NLP) models, focus on understanding and generating text.

- Computer Vision (CV) models are designed to analyze and interpret visual data (images and video).

- Speech recognition systems convert spoken language into text or understand audio inputs.

Multimodal AI, on the other hand, combines these different data streams, allowing for more comprehensive understanding and analysis. It goes beyond the limitations of single-modal systems by enabling the system to reason across diverse types of data, leading to better performance in real-world scenarios where different modalities are often interrelated.

Key Components of Multimodal AI

- Data Fusion: The process of combining information from multiple modalities into a single unified model. This involves alignment (ensuring that data from different sources is correctly matched), synchronization (ensuring that data streams are aligned in time), and integration (combining features from different sources).

- Feature Extraction: The ability to extract meaningful features from each modality (e.g., identifying objects in an image, detecting speech patterns in audio, or understanding sentiment in text) before integrating them into a unified representation.

- Cross-Modality Learning: The process through which models learn how different modalities complement each other. For instance, combining visual cues with textual descriptions can lead to a more complete understanding of a scene or event.

- Modeling and Representation: The AI system must create representations that combine information from various modalities in a meaningful way. In practice this typically relies on deep neural networks, particularly transformers and convolutional neural networks (CNNs).

How Multimodal AI Works

1. Data Representation and Embeddings

The first step in multimodal AI is to represent each type of data in a numerical form that the AI system can process. For images, a CNN might be used to extract features such as shapes, textures, or objects. For text, Word2Vec might be used to create static word embeddings, or BERT to create contextual embeddings, both of which capture semantic meaning. For audio, techniques such as Mel-frequency cepstral coefficients (MFCCs) or spectrograms convert raw sound into a feature representation.
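The idea of turning each modality into a numeric vector can be illustrated with a minimal sketch. The three functions below are deliberately simple stand-ins, not the techniques named above: a bag-of-words count replaces learned text embeddings, an intensity histogram replaces CNN features, and per-frame RMS energy replaces MFCCs. All function names and parameters are hypothetical.

```python
import math
from collections import Counter

def text_features(text, vocab):
    """Bag-of-words counts over a fixed vocabulary (toy stand-in for learned embeddings)."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]

def image_features(pixels, bins=4):
    """Normalized intensity histogram of a grayscale image (rows of 0-255 ints)."""
    flat = [p for row in pixels for p in row]
    hist = [0] * bins
    for p in flat:
        hist[min(p * bins // 256, bins - 1)] += 1
    return [h / len(flat) for h in hist]

def audio_features(samples, frame_size=4):
    """RMS energy per non-overlapping frame (toy stand-in for MFCCs)."""
    starts = range(0, len(samples) - frame_size + 1, frame_size)
    frames = [samples[i:i + frame_size] for i in starts]
    return [math.sqrt(sum(s * s for s in f) / len(f)) for f in frames]

# Each modality ends up as a plain list of floats.
text_vec = text_features("A dog chases a ball", vocab=["dog", "ball", "cat"])
image_vec = image_features([[0, 255], [64, 128]])
audio_vec = audio_features([1, 1, 1, 1, 0, 0, 0, 0])
```

Whatever extractor is used in a real system, the output has the same shape as here: one fixed-format vector per input, which is what the later fusion steps operate on.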

Once the data is represented, the system must learn to combine these different representations. This is typically done using a shared latent space, where data from different modalities is mapped into a common space, allowing the model to draw relationships between the various data streams.
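A shared latent space can be sketched with two linear projections, one per modality, followed by a similarity measure in the common space. The projection matrices below are hand-picked for illustration only; in a real system they would be learned from paired data. The names `project`, `cosine`, `W_IMAGE`, and `W_TEXT` are assumptions made for this example.

```python
import math

def project(vec, matrix):
    """Map a feature vector into the shared space (one matrix row per output dimension)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical projection weights (hand-picked here, learned in practice).
W_IMAGE = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 1.0]]          # 3-d image features -> 2-d shared space
W_TEXT = [[0.5, 0.5, 0.0, 0.0],
          [0.0, 0.0, 0.5, 0.5]]      # 4-d text features -> 2-d shared space

image_z = project([2.0, 0.0, 0.0], W_IMAGE)
matching_text_z = project([1.0, 1.0, 0.0, 0.0], W_TEXT)
unrelated_text_z = project([0.0, 0.0, 1.0, 1.0], W_TEXT)

match_score = cosine(image_z, matching_text_z)
mismatch_score = cosine(image_z, unrelated_text_z)
```

Because both modalities now live in the same two-dimensional space, an image vector can be compared directly against a text vector even though the original feature vectors had different lengths.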

2. Fusion Strategies

There are several strategies for integrating multimodal data:

- Early Fusion: Involves merging data from different modalities at the input level. This approach combines raw data or low-level features before the learning process begins. The resulting inputs can be high-dimensional and costly to train on, and the modalities must be aligned up front, but the model can learn cross-modal correlations from the start.

- Late Fusion: Involves training separate models for each modality and then combining their outputs at a later stage. This strategy reduces the complexity of data fusion but may lose some potential correlations between the modalities.

- Hybrid Fusion: A combination of early and late fusion, where certain aspects of the data are fused early, while others are processed separately and combined at a later stage.
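The difference between early and late fusion can be sketched in a few lines. This is a minimal illustration that assumes simple linear scoring; the function names, weights, and feature values are hypothetical, and a real system would learn the weights.

```python
def early_fusion(image_feats, text_feats, weights, bias=0.0):
    """Early fusion: concatenate features first, then score the joint vector once."""
    joint = list(image_feats) + list(text_feats)
    return sum(w * x for w, x in zip(weights, joint)) + bias

def late_fusion(scores, modality_weights):
    """Late fusion: combine the outputs of separate per-modality models."""
    total = sum(modality_weights)
    return sum(s * w for s, w in zip(scores, modality_weights)) / total

# Early: one model sees image and text features together.
early_score = early_fusion([1.0, 2.0], [3.0], weights=[0.5, 0.5, 1.0])

# Late: an image model and a text model each produced a score; average them,
# trusting the image model three times as much.
late_score = late_fusion([0.9, 0.5], modality_weights=[3.0, 1.0])

# Hybrid: fuse two modalities early, then combine that result with a
# third modality's separately computed score.
hybrid_score = late_fusion([early_score, 2.5], modality_weights=[1.0, 1.0])
```

In the early variant a single set of weights spans both modalities, so interactions between them can influence the score. In the late variant each modality is judged on its own and only the final scores meet.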

3. Multimodal Neural Networks

Deep neural networks play a key role in multimodal AI. These networks can be designed to handle multimodal data by using specialized layers or architectures that process different types of data in parallel or in sequence. For example:

- Multimodal transformers can be used to handle data from multiple sources, such as combining image and text data for tasks like image captioning.

- Multimodal recurrent neural networks (RNNs) are useful when dealing with time-series data from multiple sources, such as video or sensor data.

By using these techniques, multimodal neural networks can extract relevant features from different types of data and fuse them to improve performance in tasks like classification, prediction, and decision-making.
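One common building block in multimodal transformers is cross-attention, where a representation from one modality attends over features from another. The sketch below shows a single text query attending over image region features using scaled dot-product attention. It is a simplified illustration: real transformer layers also apply learned query, key, and value projections and use multiple heads, all omitted here. The function names are assumptions.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, keys, values):
    """Scaled dot-product attention for one query over a set of keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    attended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return attended, weights

# A text-token query attends over two image regions (hypothetical features).
text_query = [1.0, 0.0]
region_keys = [[10.0, 0.0], [0.0, 10.0]]
region_values = [[1.0, 0.0], [0.0, 1.0]]

attended, weights = cross_attention(text_query, region_keys, region_values)
```

The attention weights show which image region the text token draws on most; here the first region's key matches the query, so it receives nearly all of the weight.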

Applications of Multimodal AI

1. Healthcare and Medical Imaging

In healthcare, multimodal AI addresses a practical problem. Medical imaging, genomic data, patient records, and clinical notes are often stored in different formats, making it difficult for healthcare professionals to make quick, informed decisions. Multimodal AI can combine these data sources to provide a more comprehensive view of a patient’s condition, which can support better diagnoses and treatment recommendations.

For example, AI systems can combine MRI scans with patient history and genetic information to predict disease progression or recommend personalized treatment plans. By integrating data across modalities, AI systems can offer insights that would be impossible to obtain from any single source alone.

2. Autonomous Vehicles

Autonomous driving relies heavily on multimodal AI. Self-driving cars are equipped with multiple sensors, such as LiDAR, radar, and cameras, which provide different types of data about the environment. A multimodal AI system can integrate these data streams to improve navigation, object detection, and decision-making.

For instance, a camera may identify pedestrians, while a LiDAR sensor can measure their distance from the vehicle. The fusion of these modalities helps the car make more informed decisions, such as when to stop or avoid an obstacle.
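The camera-plus-LiDAR example can be sketched as a simple association step: each camera detection is matched to the LiDAR return with the closest bearing, which supplies the distance. This is a toy illustration under strong assumptions (both sensors report bearings in the same frame, no angle wrap-around, one return per object). The data layout, function names, and thresholds are hypothetical and do not reflect any particular vehicle stack.

```python
def fuse_detections(camera_detections, lidar_points, max_bearing_gap=2.0):
    """Attach a LiDAR distance to each camera detection by nearest bearing.

    camera_detections: list of (label, bearing_deg)
    lidar_points:      list of (bearing_deg, distance_m)
    """
    fused = []
    for label, cam_bearing in camera_detections:
        best = None  # (bearing gap, distance) of the closest LiDAR return so far
        for lidar_bearing, distance in lidar_points:
            gap = abs(cam_bearing - lidar_bearing)
            if gap <= max_bearing_gap and (best is None or gap < best[0]):
                best = (gap, distance)
        fused.append({
            "label": label,
            "bearing_deg": cam_bearing,
            "distance_m": best[1] if best else None,
        })
    return fused

def should_stop(fused, stop_distance_m=15.0):
    """Hypothetical rule: stop if any pedestrian is closer than the threshold."""
    return any(
        obj["label"] == "pedestrian"
        and obj["distance_m"] is not None
        and obj["distance_m"] < stop_distance_m
        for obj in fused
    )

camera = [("pedestrian", 10.0), ("cyclist", -30.0)]
lidar = [(9.5, 12.3), (45.0, 30.0)]
fused = fuse_detections(camera, lidar)
stop = should_stop(fused)
```

Neither sensor alone supports the stopping rule: the camera knows the object is a pedestrian but not how far away it is, and the LiDAR knows the distance but not what the object is.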

3. Customer Service and Virtual Assistants

Virtual assistants like Amazon Alexa, Google Assistant, and Apple Siri are often cited as examples of multimodal AI applications. These systems combine speech recognition, NLP, and contextual understanding to respond to user queries.

Going a step further, a multimodal AI system could analyze spoken requests in conjunction with visual cues (e.g., facial expressions or body language) to provide more accurate, context-aware responses. This could improve user experiences, particularly in complex scenarios like customer service, where AI needs to understand both spoken language and emotional tone.

4. Robotics and Human-Robot Interaction

In robotics, multimodal AI is essential for improving human-robot interaction (HRI). Robots are increasingly being used in environments where they must interact with humans, such as in manufacturing, elderly care, or space exploration. By integrating visual, auditory, and sensor data, robots can better understand human gestures, emotions, and speech, enabling more natural and effective interactions.

For instance, robots in elderly care homes can analyze a patient’s facial expression, body language, and voice to gauge their emotional state and respond appropriately, whether through speech, touch, or actions.

Challenges in Multimodal AI

1. Data Alignment and Synchronization

One of the main challenges in multimodal AI is ensuring that data from different modalities is aligned and synchronized. This is especially true for time-sensitive data like video or audio. If the data from different modalities doesn’t align correctly, the AI system may misinterpret the information, leading to inaccurate predictions or decisions.
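A minimal sketch of timestamp-based synchronization follows: each video frame is paired with the nearest audio chunk, and frames with no audio chunk close enough are left unpaired. It assumes both streams carry timestamps from a common clock in integer milliseconds and that the audio timestamps are sorted. The function name and tolerance are hypothetical.

```python
from bisect import bisect_left

def align_streams(frame_times_ms, audio_times_ms, tolerance_ms=20):
    """Pair each frame time with the index of the nearest audio time, or None.

    audio_times_ms must be sorted in ascending order.
    """
    pairs = []
    for t in frame_times_ms:
        i = bisect_left(audio_times_ms, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        neighbors = [j for j in (i - 1, i) if 0 <= j < len(audio_times_ms)]
        best = min(neighbors, key=lambda j: abs(audio_times_ms[j] - t), default=None)
        if best is not None and abs(audio_times_ms[best] - t) > tolerance_ms:
            best = None  # nearest chunk is too far away to count as a match
        pairs.append((t, best))
    return pairs

frames = [0, 40, 500]                 # video frame timestamps (ms)
audio = [0, 10, 20, 30, 40, 50]       # audio chunk timestamps (ms)
aligned = align_streams(frames, audio)
```

Returning `None` for unmatched frames makes gaps explicit, so a downstream model can skip or mask them rather than silently pairing a frame with unrelated audio.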

2. Data Fusion Complexity

Fusing data from multiple sources with varying formats, scales, and structures is a complex task. Developing models that can effectively handle and combine these disparate data types while preserving important information is one of the primary hurdles in multimodal AI research.
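One small part of this problem, differing scales, can be illustrated with a sketch. If one feature ranges over 0 to 255 and another over -1 to 1, naive concatenation lets the larger-scale feature dominate. Standardizing each feature before fusing is one common remedy; the helper names and sample values below are hypothetical.

```python
import statistics

def zscore(column):
    """Standardize one feature column to zero mean and unit variance."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [0.0 for _ in column]  # constant feature carries no information
    return [(x - mean) / std for x in column]

def fuse_standardized(*feature_columns):
    """Standardize each feature column, then build one fused row per sample."""
    standardized = [zscore(col) for col in feature_columns]
    return [list(row) for row in zip(*standardized)]

# One value per sample for each feature (hypothetical data).
brightness = [0.0, 255.0]    # image feature on a 0-255 scale
sentiment = [-1.0, 1.0]      # text feature on a -1 to 1 scale

fused_rows = fuse_standardized(brightness, sentiment)
```

After standardization both features contribute on comparable scales. Differences in format and structure, such as sequences versus grids, need further handling that this sketch does not cover.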

3. Scalability and Computational Resources

Multimodal AI systems are computationally intensive due to the need to process large volumes of diverse data. Training multimodal models requires powerful hardware, large datasets, and significant time. This can be a barrier for organizations with limited resources.

4. Ethical and Privacy Concerns

Multimodal AI often involves the use of sensitive data, such as images, audio, and personal information. This raises significant privacy and ethical concerns. Handling data responsibly, transparently, and securely is essential to gaining public trust and complying with regulations like the General Data Protection Regulation (GDPR).

The Future of Multimodal AI

As AI continues to evolve, multimodal systems will play an increasingly important role in enabling machines to better understand and interact with the world. In the future, we can expect more seamless and sophisticated AI-human interactions, enhanced decision-making processes, and AI-powered applications in many sectors.

The integration of multiple modalities will also pave the way for innovations like AI-powered diagnostics, real-time language translation, smart cities, and personalized education, all of which will rely on rich, multimodal datasets to function effectively.

As the technology matures, overcoming the current challenges of data fusion, computational complexity, and ethical concerns will be critical to unlocking the full potential of multimodal AI.

Conclusion

Multimodal AI is a major direction for intelligent systems, enabling machines to process and understand data from various modalities to perform complex tasks. By integrating text, images, audio, and other forms of data, multimodal AI is transforming industries ranging from healthcare and autonomous vehicles to robotics and customer service.

As we continue to advance in this field, collaboration among AI researchers, technologists, and policymakers will be essential to overcoming the challenges and ensuring that multimodal AI technologies are developed responsibly and ethically. The next generation of AI systems will not just understand isolated pieces of information. They will integrate and reason across diverse data sources, opening up new possibilities for innovation and application across the globe.