Abstract

The Transformer architecture and its self-attention mechanism have revolutionized deep learning, especially in natural language processing (NLP). By processing all positions of a sequence in parallel and capturing long-range dependencies, these models have substantially improved the performance of AI systems. Despite this success, Transformer-based models face challenges related to computational complexity and to their ability to represent diverse, intricate relationships within data. This article explores ongoing efforts to optimize Transformer architectures and self-attention mechanisms, with a focus on model expressiveness and efficiency. We also examine the challenges these models face and the innovations that are making them more scalable, accurate, and capable of handling complex tasks across various domains.

1. Introduction: The Impact of Transformer Architectures in AI

1.1 The Emergence of Transformer Models

The introduction of the Transformer in the 2017 paper “Attention Is All You Need” by Vaswani et al. marked a turning point in deep learning. Transformers, initially designed for natural language processing (NLP) tasks, have since become the backbone of several groundbreaking AI models, including BERT, GPT-3, T5, and BART. Unlike recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which process a sequence one step at a time, Transformers use a mechanism known as self-attention to handle sequential data.

Self-attention allows a model to weigh the importance of each token in a sequence relative to the others, enabling it to capture long-range dependencies without passing information step by step through a recurrent state. Because all elements of a sequence are attended to in parallel, training is far more efficient and scalable on modern hardware. Transformers have not only outperformed previous architectures in language understanding but have also been successfully applied to fields like computer vision, audio processing, and even genomics.

However, despite their success, there are still several aspects of the Transformer architecture that need to be optimized to improve its expressiveness and efficiency.

2. The Architecture of Transformer Models

2.1 The Basics of Transformer Networks

At the core of Transformer models lie the self-attention mechanism and the encoder-decoder architecture. The encoder processes input sequences, while the decoder generates output sequences, as in tasks like machine translation. The self-attention mechanism enables the model to look at the entire sequence of input tokens and determine, for each token, which other parts of the sequence are most relevant.

Key Components of a Transformer:

- Input Embeddings: These represent the tokens in a high-dimensional vector space. Word embeddings capture semantic relationships between words, while positional encodings are used to retain information about the order of the tokens in the sequence.

- Self-Attention Mechanism: For each token, the self-attention mechanism computes a weighted sum of the value vectors of all tokens in the input sequence, with the weights reflecting how relevant each other token is to it.

- Multi-Head Attention: To capture different aspects of relationships between tokens, multiple self-attention mechanisms (heads) are run in parallel, allowing the model to focus on various parts of the input sequence simultaneously.

- Feed-Forward Neural Networks: After the attention layer, each token’s representation is passed through a feed-forward neural network for further processing.

- Layer Normalization and Residual Connections: These are used to stabilize training and ensure that gradients flow effectively through the network.

Both the encoder and the decoder are built by stacking these components. Because attention processes all positions of a sequence at once rather than step by step, computations parallelize well, significantly speeding up training compared to RNN-based models.
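To make the interplay of these components concrete, the following is a minimal NumPy sketch of a single encoder layer. It is an illustration under stated assumptions, not a reference implementation: the function names, the parameter dictionary `p`, and the toy sizes are all hypothetical, the weights are random rather than trained, layer normalization omits its learned gain and bias, and the layer uses the "add, then normalize" ordering described in the original paper.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance.
    # (Learned gain and bias are omitted in this sketch.)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    n, d_model = X.shape
    d_head = d_model // num_heads

    def split(M):
        # (n, d_model) -> (num_heads, n, d_head)
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, n, n)
    heads = softmax(scores, axis=-1) @ V                  # (heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_o

def encoder_layer(X, p, num_heads):
    # Sub-layer 1: multi-head self-attention with residual connection.
    attn = multi_head_attention(X, p["W_q"], p["W_k"], p["W_v"], p["W_o"], num_heads)
    X = layer_norm(X + attn)
    # Sub-layer 2: position-wise feed-forward network (ReLU) with residual.
    ffn = np.maximum(0.0, X @ p["W_1"] + p["b_1"]) @ p["W_2"] + p["b_2"]
    return layer_norm(X + ffn)

# Toy sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
n, d_model, d_ff, num_heads = 6, 8, 32, 2
p = {name: rng.normal(scale=0.1, size=(d_model, d_model))
     for name in ("W_q", "W_k", "W_v", "W_o")}
p.update(W_1=rng.normal(scale=0.1, size=(d_model, d_ff)), b_1=np.zeros(d_ff),
         W_2=rng.normal(scale=0.1, size=(d_ff, d_model)), b_2=np.zeros(d_model))

X = rng.normal(size=(n, d_model))   # stand-in for embeddings + positional encodings
out = encoder_layer(X, p, num_heads)
print(out.shape)
```

The output has the same shape as the input, which is what allows such layers to be stacked.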

2.2 Self-Attention: The Heart of Transformer Models

The self-attention mechanism is a key innovation in Transformers. It works by computing three vectors for each token: Query (Q), Key (K), and Value (V). These vectors are obtained by multiplying each token’s input representation by three learned projection matrices, and they are used to compute attention scores, which indicate how much focus a given token should place on every other token in the sequence.

Steps of Self-Attention:

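- Calculating Attention Scores: For each token in the sequence, the dot product between its query and the keys of all tokens is computed and then divided by the square root of the key dimension, which keeps the scores in a range where the softmax remains well behaved. This results in a set of attention scores.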

- Softmax Normalization: The attention scores are passed through a softmax function to normalize them, ensuring that they sum to one.

- Weighted Sum: The value vectors are weighted by the normalized attention scores and summed to produce a context-sensitive representation of each token.

This process allows the Transformer model to capture dependencies between tokens, even if they are far apart in the sequence. This is in stark contrast to RNNs, where long-range dependencies are harder to capture due to vanishing gradients.
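The three steps above can be sketched in a few lines of NumPy. This is a minimal single-head illustration: the function names, the toy dimensions, and the random projection matrices `W_q`, `W_k`, and `W_v` are assumptions made for the example (in a real model these matrices are learned). The division by the square root of the key dimension follows the scaled dot-product attention of Vaswani et al.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n, d_model) token representations.
    W_q, W_k, W_v: (d_model, d_k) projection matrices.
    Returns the (n, d_k) outputs and the (n, n) attention weights.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # step 1: scaled dot products
    weights = softmax(scores, axis=-1)   # step 2: each row sums to one
    return weights @ V, weights          # step 3: weighted sum of values

# Toy input: 5 tokens, random weights in place of learned ones.
rng = np.random.default_rng(0)
n, d_model, d_k = 5, 8, 4
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape, weights.shape)
```

Row i of `weights` holds the attention that token i pays to every token in the sequence, so any two positions interact directly regardless of how far apart they are.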

3. Optimization of Transformer Models

While Transformer models have shown great promise, there are several areas where optimization can improve their performance, efficiency, and expressiveness. As Transformer models grow in size and are applied to longer inputs, their computational requirements rise steeply (self-attention alone scales quadratically with sequence length), leading to challenges related to both scalability and resource efficiency.

3.1 Reducing Computational Complexity: Efficient Transformers

One of the primary challenges with Transformer models is their computational complexity. The original self-attention mechanism compares every token with every other token, so its time and memory costs are O(n²), where n is the length of the input sequence. This makes Transformers particularly challenging to scale to very long sequences: doubling the sequence length roughly quadruples the cost of attention.
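A quick back-of-the-envelope calculation shows how fast the attention matrix grows. The sketch below counts only the n × n score matrix for one head and one sequence, and assumes 4 bytes per score (32-bit floats); the function name is hypothetical, and real memory use depends on batch size, number of heads, precision, and implementation.

```python
def attention_scores_bytes(seq_len, bytes_per_score=4):
    """Memory for one n x n attention matrix (one head, one sequence).

    bytes_per_score=4 assumes 32-bit floats.
    """
    return seq_len * seq_len * bytes_per_score

for n in (512, 2048, 8192):
    mib = attention_scores_bytes(n) / 2**20
    print(f"n = {n:>4}: {n * n:>10,} scores, {mib:.0f} MiB")
```

Under these assumptions the matrix takes 1 MiB at 512 tokens, 16 MiB at 2,048 tokens, and 256 MiB at 8,192 tokens: a 16-fold increase in length costs 256 times the memory.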

Efficient Transformer Variants:

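- Linformer: This model reduces the complexity of the self-attention mechanism with a low-rank approximation: keys and values are projected to a fixed, shorter length along the sequence axis, so the cost grows linearly rather than quadratically with sequence length.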

- Longformer: Longformer introduces sliding window attention, which computes attention over a local window of tokens instead of the entire sequence, reducing the overall computational burden.

- Reformer: Reformer leverages locality-sensitive hashing and reversible layers to reduce the memory and time complexity of the self-attention mechanism, making it more scalable.

- Sparse Transformer: Sparse attention reduces the quadratic complexity by using fixed sparsity patterns, so that each token attends to only a subset of the sequence. This can substantially improve efficiency, often with little loss in accuracy.

These optimizations enable Transformer models to handle longer sequences with lower resource consumption while keeping accuracy competitive in tasks such as language modeling and document classification.
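As an illustration of one of these ideas, the sketch below builds a sliding-window attention pattern of the kind described for Longformer. It is a simplified demonstration, not Longformer’s actual implementation: the function names are hypothetical, and the code still materializes a dense n × n matrix and merely masks it, whereas an efficient implementation would compute only the entries inside the band.

```python
import numpy as np

def sliding_window_mask(n, window):
    """Boolean (n, n) mask: True where token i may attend to token j,
    i.e. where |i - j| <= window."""
    idx = np.arange(n)
    return np.abs(idx[:, None] - idx[None, :]) <= window

def masked_attention(Q, K, V, mask):
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    # Disallowed pairs get -inf, so they receive zero weight after softmax.
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_k, window = 8, 4, 1
Q, K, V = (rng.normal(size=(n, d_k)) for _ in range(3))

mask = sliding_window_mask(n, window)
out, weights = masked_attention(Q, K, V, mask)
print(mask.sum(), "of", n * n, "token pairs are attended to")
```

With a window of one token on each side, only 22 of the 64 pairs are used. In general the number of attended pairs grows as roughly n × (2 × window + 1), which is linear in n for a fixed window.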

3.2 Enhancing Expressiveness with Hybrid Architectures

To further enhance the expressiveness of Transformer models, researchers have begun combining Transformers with other neural architectures to leverage their strengths in different types of data representation.

Hybrid Approaches:

- Transformer + Convolutional Networks: Some models combine the power of Transformers for sequence processing with convolutional networks (CNNs) for capturing spatial relationships. This is particularly useful in computer vision, where tasks like image classification or object detection benefit from both local feature extraction (via CNNs) and long-range dependencies (via Transformers).

- Vision Transformers (ViT): ViT applies the Transformer model directly to image patches, treating them as a sequence of tokens. When pre-trained on sufficiently large datasets, it achieves results competitive with strong convolutional models on image classification.

- Transformer + Graph Neural Networks (GNNs): In cases where input data is structured as graphs (e.g., social networks, molecular structures), combining Transformers with GNNs can help the model capture both global dependencies (via self-attention) and local graph relationships (via message passing).

These hybrid architectures are designed to address the diverse and often complex nature of real-world data, improving the model’s ability to generalize across different domains.
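The "image patches as tokens" idea behind ViT can be sketched as follows. The 224 × 224 RGB input, the 16 × 16 patch size, the embedding width of 64, and the names `image_to_patch_tokens` and `W_embed` are assumptions chosen for illustration; the projection matrix is random here, whereas in a trained model it is learned and positional information is added afterwards.

```python
import numpy as np

def image_to_patch_tokens(image, patch_size):
    """Split an (H, W, C) image into non-overlapping square patches and
    flatten each patch into one token vector.

    Returns an array of shape (num_patches, patch_size * patch_size * C).
    """
    H, W, C = image.shape
    if H % patch_size or W % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    gh, gw = H // patch_size, W // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, C)
    patches = patches.transpose(0, 2, 1, 3, 4)   # (gh, gw, p, p, C)
    return patches.reshape(gh * gw, patch_size * patch_size * C)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))                # stand-in for a real image
tokens = image_to_patch_tokens(image, patch_size=16)

d_model = 64                                     # illustrative embedding width
W_embed = rng.normal(scale=0.02, size=(tokens.shape[1], d_model))
embedded = tokens @ W_embed                      # one embedding per patch

print(tokens.shape, embedded.shape)
```

Under these assumptions the image becomes a sequence of 196 tokens (a 14 × 14 grid of patches), each with 768 raw values, which a standard Transformer encoder can then process like a sentence of 196 words.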

3.3 Advanced Self-Attention Mechanisms: Expanding Model Capability

While the traditional self-attention mechanism has proven to be highly effective, various improvements have been proposed to enhance its ability to capture more nuanced relationships in data.

Improved Self-Attention Techniques:

- Relative Positional Encoding: The standard Transformer uses absolute positional encoding to represent the position of each token in a sequence. Absolute encodings, particularly learned ones, tend to generalize poorly to sequences longer than those seen during training. Relative positional encoding instead represents the offset between each pair of tokens, allowing the model to capture how far apart two tokens are regardless of where they occur in the sequence.

- Multi-Scale Attention: Some Transformer models employ multi-scale attention to capture dependencies at different scales or granularities, improving their ability to handle tasks involving hierarchical structures, such as parsing or machine translation.

By optimizing self-attention in this way, Transformers can more effectively capture the intricate relationships present in complex data, improving their performance in a variety of tasks.
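One simple way to realize relative positional information is to add a bias to each attention score that depends only on the clipped offset between the two tokens. The sketch below illustrates that idea under stated assumptions: the function names and `bias_table` are hypothetical, the table holds random stand-ins for learned values, and published relative-position schemes differ in their details.

```python
import numpy as np

def relative_position_index(n, max_distance):
    """(n, n) integer matrix whose entry [i, j] encodes the offset j - i,
    clipped to [-max_distance, max_distance] and shifted to start at 0."""
    idx = np.arange(n)
    rel = idx[None, :] - idx[:, None]
    rel = np.clip(rel, -max_distance, max_distance)
    return rel + max_distance

def attention_with_relative_bias(Q, K, V, bias_table):
    n, d_k = Q.shape
    max_distance = (len(bias_table) - 1) // 2
    # Look up one scalar bias per (i, j) pair from its relative offset.
    bias = bias_table[relative_position_index(n, max_distance)]
    scores = Q @ K.T / np.sqrt(d_k) + bias
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ V

rng = np.random.default_rng(0)
max_distance, d_k = 2, 4
bias_table = rng.normal(size=2 * max_distance + 1)  # stand-in for learned biases

# The same table is reused for sequences of different lengths.
for n in (4, 9):
    Q, K, V = (rng.normal(size=(n, d_k)) for _ in range(3))
    out = attention_with_relative_bias(Q, K, V, bias_table)
    print(n, out.shape)
```

Because the bias depends only on the offset and not on the absolute positions, nothing in the table is tied to a particular sequence length.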

4. Applications and Future Directions

The optimization of Transformer and self-attention architectures has had a profound impact on a wide range of AI applications, especially in fields like natural language processing, computer vision, speech recognition, and reinforcement learning.

4.1 Natural Language Processing (NLP)

Transformers have become the foundation for modern NLP models, powering systems like Google’s BERT and T5 and OpenAI’s GPT series. These models have drastically improved the ability of machines to understand and generate human language, leading to breakthroughs in tasks such as question answering, text summarization, language translation, and sentiment analysis.

4.2 Computer Vision

In computer vision, the Vision Transformer (ViT) has demonstrated that Transformers can match or outperform CNNs on image classification when pre-trained on sufficiently large datasets, and Transformer-based models have since been applied to object detection and image segmentation as well. The ability to model long-range dependencies between image patches allows these models to capture more contextual information, which can lead to higher accuracy in complex visual tasks.

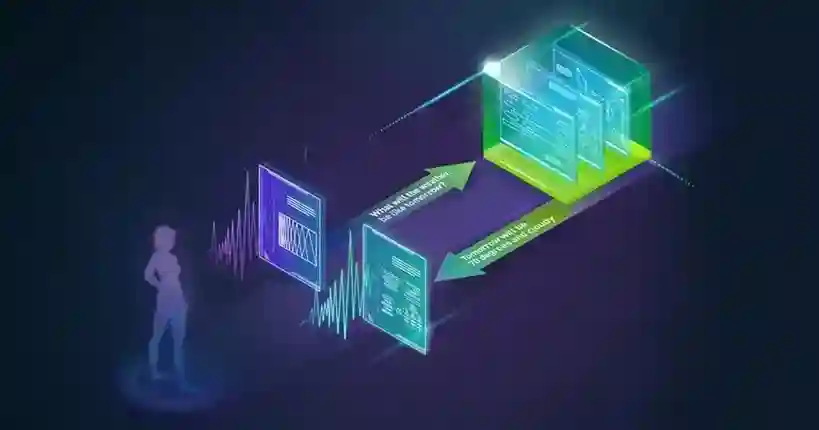

4.3 Speech and Audio Processing

Transformers have also shown great promise in speech processing tasks such as speech recognition, voice synthesis, and emotion detection. By capturing long-range dependencies in audio sequences, Transformers can model the temporal structure of speech more accurately, which can also help in noisy environments.

4.4 Reinforcement Learning and Decision Making

Transformers are increasingly being used in reinforcement learning (RL) to improve decision-making in dynamic environments. Self-attention mechanisms enable models to process sequences of actions and states efficiently, making them well suited to tasks that require long-term planning, such as robotic control or multi-agent environments.

5. Conclusion

The Transformer architecture, with its self-attention mechanism, has revolutionized deep learning by enabling models to handle complex tasks that require an understanding of long-range dependencies. Ongoing research to optimize Transformers, whether by reducing computational complexity, improving expressiveness, or integrating with other architectures, continues to enhance their effectiveness and scalability.

As AI continues to evolve, Transformers will likely remain central to advancements in natural language processing, computer vision, speech recognition, and beyond. By addressing current challenges, such as computational inefficiency and scalability, these optimized models will help bridge the gap between human cognitive capabilities and artificial intelligence, opening up new possibilities in a wide range of domains.