Introduction: The Challenge of Labeled Data in AI Training

In recent years, machine learning (ML) and artificial intelligence (AI) have become integral to numerous industries, from healthcare and finance to autonomous driving and natural language processing. However, despite the rapid progress, one of the fundamental challenges in building robust AI systems remains the dependence on labeled data. Traditional supervised learning techniques, which require large amounts of manually labeled data, have limitations in terms of scalability, data acquisition, and cost.

Moreover, with the increasing complexity of AI models, there’s a growing concern about the generalization ability of models, especially when trained on limited or biased data. A model trained on a specific dataset may perform well on the test data but fail to generalize effectively to unseen data from different distributions. Therefore, improving model generalization and reducing the need for labeled data have become central problems in AI research.

To address these challenges, innovative training paradigms like self-supervised learning (SSL) and federated learning (FL) are emerging as powerful solutions. These new methods not only reduce the reliance on labeled data but also improve the robustness and generalization of machine learning models, making them more effective in real-world applications.

This article explores self-supervised learning, federated learning, and other emerging training methods, focusing on their principles, applications, and their potential to transform the future of AI.

1. The Importance of Labeled Data in Traditional Machine Learning

1.1 The High Cost of Labeled Data

In traditional supervised learning, training a model requires large amounts of labeled data. These labels are typically created by humans, either through manual annotation or by using pre-existing labeled datasets. For example, to train an image classification model, each image in the dataset must be labeled with the correct class (e.g., “dog,” “cat,” “car”).

However, obtaining these labels is often expensive and time-consuming, especially in industries like healthcare and autonomous driving, where expert knowledge is needed for accurate labeling. Medical images, for example, require radiologists to annotate each image, a process that takes a considerable amount of time and effort.

1.2 Limitations of Labeled Data for Model Generalization

Even when large labeled datasets are available, there is no guarantee that the model will generalize well to new, unseen data. Models trained on specific datasets may overfit to the training data, meaning they perform well on familiar examples but fail when exposed to different distributions, environments, or contexts.

This phenomenon is particularly problematic when the labeled data is biased or not representative of the real-world distribution. A model trained on biased or non-representative data will likely perform poorly when deployed in real-world settings.

2. Self-Supervised Learning: Reducing Dependency on Labeled Data

2.1 What is Self-Supervised Learning (SSL)?

Self-supervised learning is a class of machine learning techniques that enables a model to learn useful representations from unlabeled data. The key idea behind SSL is to generate pseudo-labels from the data itself, eliminating the need for manual annotation. In SSL, the model is trained to predict parts of the data from other parts of the same data, effectively learning to understand the structure of the data without any explicit supervision.

For example, in natural language processing (NLP), a common SSL approach is masked language modeling (MLM), where a portion of the text is masked, and the model must predict the missing words. This allows the model to learn meaningful representations of language without relying on labeled data.

2.2 How SSL Works: Pretext and Downstream Tasks

In SSL, there are two main tasks: pretext tasks and downstream tasks.

- Pretext Tasks: These are self-supervised tasks that the model is trained on, typically generated by manipulating the raw data. For instance, in image recognition, a pretext task might involve image rotation prediction, where the model is trained to predict the rotation angle of an image. In NLP, a pretext task might involve predicting missing words in a sentence (as mentioned earlier with MLM).

- Downstream Tasks: Once the model has learned useful representations through the pretext task, these representations are transferred to downstream tasks like classification, regression, or other supervised learning tasks. The learned representations can be used as features for models in specific applications, such as object detection or sentiment analysis.

2.3 Applications of Self-Supervised Learning

SSL has found applications across various domains, including:

- Computer Vision: SSL has revolutionized the field of computer vision by enabling models to learn from vast amounts of unlabeled image data. Techniques such as contrastive learning and self-supervised image generation allow models to learn rich visual features, which can then be used for tasks like object detection, segmentation, and image captioning.

- Natural Language Processing (NLP): SSL has significantly advanced NLP models. Pretraining language models like BERT and GPT using masked word prediction tasks has led to breakthroughs in tasks like question answering, text summarization, and machine translation, all with minimal labeled data.

- Audio Processing: SSL has also been applied to speech recognition and audio classification. For example, a model can learn to predict missing parts of audio signals or generate embeddings for audio data, which can be used in downstream tasks such as speech-to-text.

2.4 Benefits of Self-Supervised Learning

- Reduced Labeling Effort: SSL significantly reduces the need for labeled data, as it leverages vast amounts of unlabeled data to train models. This is particularly useful in fields where labeled data is scarce or expensive to obtain.

- Improved Model Generalization: By learning from a more diverse set of data, SSL models tend to generalize better to unseen examples, as they learn a broader set of representations. This leads to improved robustness and adaptability.

- Pretraining for Specific Tasks: SSL enables the use of pre-trained models for downstream tasks. For example, a model pre-trained on large-scale unlabeled data can be fine-tuned on smaller labeled datasets, reducing the time and effort required for task-specific training.

3. Federated Learning: Collaborative Learning with Privacy Preservation

3.1 What is Federated Learning (FL)?

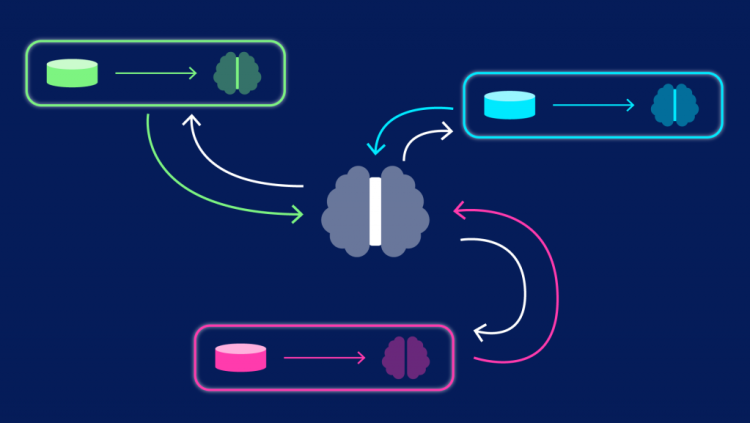

Federated learning is a decentralized machine learning approach that allows multiple devices (often mobile or edge devices) to collaboratively train a shared model without sharing their local data. Instead of collecting data in a central server, the model is sent to each device, and the device updates the model with its local data. Only the updated model parameters (weights) are shared with the server, ensuring that raw data never leaves the device.

3.2 How Federated Learning Works

In federated learning, a central server coordinates the training process across all participating devices:

- Model Initialization: A global model is initialized on the central server.

- Local Training: Each device trains the model locally using its own data.

- Model Aggregation: After training, each device sends the updated model parameters back to the server.

- Global Update: The server aggregates the updates from all devices to create a new global model, which is then sent back to the devices for further training.

This process repeats iteratively until the model converges.

3.3 Applications of Federated Learning

Federated learning is particularly useful in scenarios where data privacy is a concern or where data is distributed across multiple devices. Some key applications include:

- Mobile Devices: Companies like Google have implemented federated learning for keyboard prediction (e.g., Gboard), where the model is trained on users’ local data without compromising their privacy.

- Healthcare: Federated learning can be used to train machine learning models on medical data from hospitals or clinics while keeping sensitive patient information private.

- Autonomous Vehicles: In the automotive industry, federated learning allows vehicles to improve their driving models by sharing insights with a central server without transmitting sensitive driving data.

3.4 Benefits of Federated Learning

- Data Privacy and Security: Since data remains on the local device and only model updates are shared, federated learning helps ensure data privacy and compliance with privacy regulations (such as GDPR).

- Reduced Data Transfer Costs: By limiting data transfer to model parameters, federated learning reduces the need for large-scale data storage and bandwidth usage.

- Scalability: FL enables collaborative learning across a vast number of devices without the need for central data collection, making it scalable across millions of devices.

4. Other Emerging Methods for Reducing Labeled Data Dependence

4.1 Transfer Learning

Transfer learning is a technique where a model trained on one task is adapted for use on a different but related task. Instead of starting from scratch, the model leverages pre-learned representations from a similar domain to jump-start training on the target task. This reduces the amount of labeled data required for fine-tuning, as the model already has a general understanding of features and patterns.

4.2 Semi-Supervised Learning

Semi-supervised learning is a hybrid approach that uses both labeled and unlabeled data. A small amount of labeled data is used to guide the learning process, while the model also learns from the vast amounts of unlabeled data. This reduces the reliance on labeled data and improves the model’s ability to generalize.

Conclusion: The Future of AI Training Paradigms

Emerging training methods such as self-supervised learning and federated learning are playing a pivotal role in addressing the key challenges facing modern AI development: reducing the reliance on labeled data and improving model generalization. These techniques not only make AI more accessible and scalable but also contribute to the development of models that are more robust, adaptable, and privacy-conscious.

As AI continues to evolve, it is likely that these training paradigms will become even more integrated into mainstream applications, unlocking new capabilities and opening the door to more efficient, privacy-preserving, and generalizable AI models. The future of AI will not only be defined by its algorithms but also by how we train and scale them in an increasingly data-constrained world.