Introduction

Artificial intelligence (AI) is advancing quickly. Over the past decade, breakthroughs in neural network architectures, training algorithms, and hardware accelerators have reshaped industries ranging from healthcare and finance to autonomous systems and scientific research. Nvidia sits at the center of this shift: its GPUs have changed how computational workloads are executed, particularly in AI and high‑performance computing (HPC).

Nvidia recently unveiled its next generation of AI hardware and accompanying models. The new generation promises higher performance, better energy efficiency, and greater scalability, attributes that matter more as AI workloads grow in size and complexity. This article examines Nvidia’s latest AI hardware and model innovations: the architectural enhancements, strategic implications, real‑world applications, and the broader context of AI infrastructure evolution.

1. The Imperative for Next‑Generation AI Hardware

1.1 The Growth of AI Workloads

AI workloads have grown rapidly in scale and computational demand. Modern deep learning models, particularly large language models (LLMs), generative models, and multi‑modal neural networks, require vast amounts of matrix multiplication, tensor operations, and high‑speed memory access. LLMs such as OpenAI’s GPT family and Google’s PaLM series have billions to trillions of parameters, and training them demands hardware that supports massive parallelism, high memory bandwidth, and efficient interconnects.

1.2 Bottlenecks in Traditional Architectures

Traditional CPU‑centric computing systems are ill‑suited for such workloads due to limited parallel processing capabilities. Early GPU accelerators offered significant improvements, but as models scaled, even GPU clusters faced challenges such as:

- Communication overhead between nodes during distributed training.

- Thermal limitations when operating at high throughput.

- Energy costs associated with sustained high‑performance operation.

- Memory constraints for storing and processing large model weights and intermediate activations.

These bottlenecks necessitated a new class of hardware that could holistically address the computational, memory, and networking demands of next‑generation AI workloads.
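To make the memory and communication bottlenecks above concrete, the following is a rough back‑of‑the‑envelope sketch. The model size and GPU count are illustrative assumptions, not figures from any Nvidia product, and the function names are hypothetical. The estimate covers only the weights; optimizer state and activations add to it. The communication figure uses the standard cost of a ring all‑reduce, which is one common way to synchronize gradients and not necessarily what a given cluster uses.

```python
# Back-of-the-envelope estimates for two of the bottlenecks listed above.
# All model sizes and GPU counts below are illustrative assumptions.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def ring_allreduce_bytes_per_gpu(payload_bytes: int, num_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce of `payload_bytes`.

    A ring all-reduce runs a reduce-scatter followed by an all-gather.
    Each phase moves (N - 1) / N of the payload per GPU, so the total
    is 2 * (N - 1) / N times the payload.
    """
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


if __name__ == "__main__":
    params = 70_000_000_000  # hypothetical 70B-parameter model
    for dtype in ("fp32", "fp16", "int8"):
        print(f"{dtype}: {weight_memory_gib(params, dtype):.1f} GiB of weights")

    # Gradients in FP16 have the same size as FP16 weights.
    grad_bytes = params * BYTES_PER_PARAM["fp16"]
    sent = ring_allreduce_bytes_per_gpu(grad_bytes, num_gpus=8)
    print(f"per-GPU all-reduce traffic per step: {sent / 2**30:.1f} GiB")
```

Even under these simplified assumptions, the weights alone can exceed the memory of a single accelerator, and every training step moves a volume of gradient data comparable to the model itself, which is why memory capacity and interconnect bandwidth appear together in the list of bottlenecks.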

2. Nvidia’s Next‑Generation AI Hardware: An Overview

2.1 Architectural Innovations

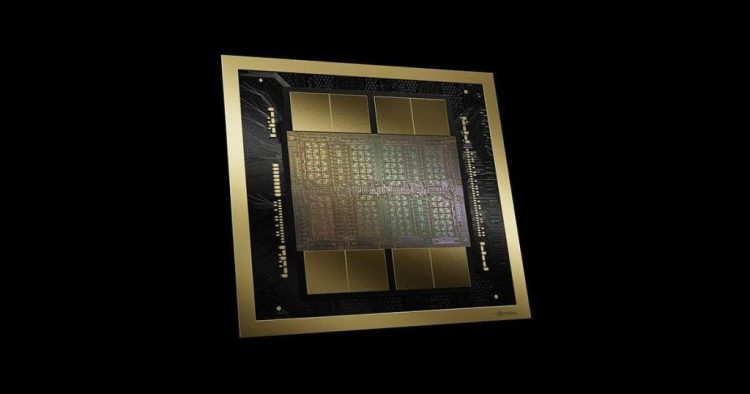

At the core of Nvidia’s next‑generation AI hardware lies architectural innovation designed to amplify computational throughput while minimizing latency and energy expenditure. Key components include:

- Advanced Tensor Cores: Optimized for AI‑centric operations such as mixed‑precision matrix math, tensor cores accelerate deep learning training and inference beyond the capabilities of traditional CUDA cores.

- High‑Bandwidth Memory (HBM3 or later): Increased memory bandwidth reduces data transfer delays, keeping compute units supplied with data when working with large datasets and models.

- Scalable Interconnect Fabric: High‑speed interconnects such as Nvidia NVLink provide bandwidth‑rich communication paths between GPUs, ensuring efficient distributed training.

- Custom AI Accelerators: Specialized processing units for specific workloads, such as sparse tensor operations or transformer inference, further enhance efficiency.

These innovations collectively form a hardware ecosystem capable of supporting increasingly complex AI systems.
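The mixed‑precision matrix math mentioned in the Tensor Core item can be illustrated with a small sketch. A common mixed‑precision pattern stores inputs in FP16 but accumulates the running sum in FP32. The code below simulates that in pure Python by rounding through the `struct` module's half‑ and single‑precision formats; the helper names (`to_fp16`, `to_fp32`, `dot`) are hypothetical, and this is a numerical illustration under those assumptions, not a description of how any particular Tensor Core is implemented.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(xs, ys, accumulate):
    """Dot product of FP16 inputs; `accumulate` rounds the running sum."""
    total = 0.0
    for x, y in zip(xs, ys):
        product = to_fp16(x) * to_fp16(y)    # inputs are stored in FP16
        total = accumulate(total + product)  # accumulator precision varies
    return total


if __name__ == "__main__":
    n = 4096
    xs = [1.0] * n
    ys = [1.0] * n
    print("FP16 accumulator:", dot(xs, ys, to_fp16))
    print("FP32 accumulator:", dot(xs, ys, to_fp32))
```

With all‑ones inputs the exact answer is 4096. The FP16 accumulator stops growing once the running sum reaches 2048, because adjacent FP16 values are 2 apart at that magnitude and adding 1 rounds back down. The FP32 accumulator reaches the exact result, which is the motivation for keeping the accumulator wider than the inputs.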

2.2 Unified Compute Architecture

Nvidia’s next‑gen hardware embraces a unified compute architecture that supports training, inference, and deployment workloads in a cohesive framework. This paradigm simplifies the AI development pipeline by:

- Eliminating performance fragmentation across different stages of development.

- Enabling seamless scaling from single‑device experimentation to large‑scale distributed deployments.

- Providing consistency in software frameworks and optimization libraries.

2.3 Power and Efficiency Improvements

Energy consumption remains a paramount concern for AI infrastructure operators. Nvidia’s hardware addresses this by integrating:

- Dynamic power scaling capabilities.

- Improved performance‑per‑watt metrics.

- Hardware‑level support for sparsity and reduced‑precision techniques that aim to preserve model accuracy while lowering computational load.

These advances are critical for data centers aiming to balance performance with sustainability goals.
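As one illustration of hardware‑friendly sparsity, Nvidia GPUs since the Ampere generation support a 2:4 structured pattern, in which at most two of every four consecutive weights are non‑zero. Whether the hardware discussed here uses the same pattern is an assumption. The sketch below applies simple magnitude‑based 2:4 pruning to a flat list of weights; the function name is hypothetical, and real workflows typically fine‑tune the model afterwards to recover accuracy.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest magnitudes in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    row = [0.1, -0.9, 0.3, 0.05, 2.0, 0.0, -0.5, 1.0]
    print(prune_2_of_4(row))
```

Because the pattern is regular, hardware can skip the zeroed positions without the indexing overhead of unstructured sparsity, which is what makes the approach attractive for lowering computational load.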

3. New Nvidia AI Models: Expanding the Frontier of Intelligence

While hardware lays the foundation, AI models are the superstructure that extracts value from computational resources. Nvidia’s next‑generation initiative also includes a suite of proprietary and open AI models optimized for its hardware.

3.1 Next‑Gen Large Language Models (LLMs)

Nvidia’s next‑gen LLMs are engineered to deliver:

- Higher contextual understanding: By incorporating architectural refinements and training on broader datasets.

- Greater multi‑modal capabilities: Enabling seamless integration of text, image, audio, and video processing.

- Hardware‑aware optimization: Tailored to exploit tensor core advancements and high‑bandwidth memory pathways.

These models serve as foundational blocks for applications such as advanced search, content generation, summarization systems, and intelligent agents.

3.2 Domain‑Specific AI Models

Beyond general‑purpose LLMs, Nvidia’s model ecosystem includes domain‑specific variants optimized for:

- Healthcare analytics and diagnostics

- Financial modeling and risk assessment

- Scientific simulations (e.g., protein folding, material science)

- Robotics and autonomous systems

Domain tuning enables significant performance and accuracy gains by incorporating domain knowledge, data characteristics, and task‑specific optimizations.

3.3 Model Compression and Efficient Inference

To extend the reach of powerful AI models to resource‑constrained environments (e.g., edge devices, embedded systems), Nvidia emphasizes model compression techniques such as:

- Quantization

- Pruning

- Knowledge distillation

With these techniques, models achieve faster inference and smaller memory footprints while keeping accuracy at a level suitable for real‑world tasks.
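Of the three techniques listed, quantization is the simplest to show in a few lines. The sketch below is a minimal symmetric, per‑tensor INT8 quantizer written in plain Python. The function names and the choice of a single scale per tensor are assumptions made for illustration; production toolchains usually add calibration data and per‑channel scales.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of a non-empty list of floats.

    Returns integer codes in [-127, 127] and the scale that maps a code
    back to an approximate float (value ~= code * scale).
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [-2.0, 0.0, 0.5, 2.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print("codes:   ", codes)
    print("restored:", restored)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per value. Whether that error is acceptable depends on the model and task, which is why accuracy is normally re‑validated after quantization.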

4. The Software Stack: Enabling Efficient AI Development

Hardware and models are only as effective as the software tools that orchestrate them. Nvidia’s AI ecosystem includes a robust software stack designed to streamline development, optimization, and deployment.

4.1 CUDA and cuDNN Foundations

Nvidia’s proprietary Compute Unified Device Architecture (CUDA) remains the cornerstone of GPU programming on Nvidia hardware. Alongside CUDA, the CUDA Deep Neural Network library (cuDNN) provides highly optimized routines for deep learning primitives such as convolution, pooling, and normalization, which frameworks rely on to run efficiently on Nvidia GPUs.

4.2 AI Framework Integrations

Leading AI frameworks such as TensorFlow, PyTorch, JAX, and MXNet integrate with Nvidia’s hardware acceleration features. Companion libraries and plugins (e.g., TensorRT for inference optimization) facilitate:

- Automated kernel acceleration

- Optimized graph execution

- Reduced development friction through high‑level APIs

4.3 Fleet‑Scale Orchestration with Nvidia AI Enterprise

For enterprise environments, Nvidia AI Enterprise provides:

- Cluster management tools

- Monitoring and telemetry

- Workload scheduling

- Secure multi‑tenant support

This platform enables IT teams to efficiently manage AI workloads across heterogeneous infrastructure.

4.4 Simulation and Digital Twins

Nvidia’s Omniverse platform enables realistic simulation environments for robotics, autonomous vehicles, and digital twin applications. By integrating physics‑accurate simulations with AI training pipelines, developers can:

- Generate rich synthetic data

- Test scenarios in virtual environments

- Validate models prior to real‑world deployment

5. Data Center Transformations: Scaling for the Future

5.1 Modular AI Supercomputing Platforms

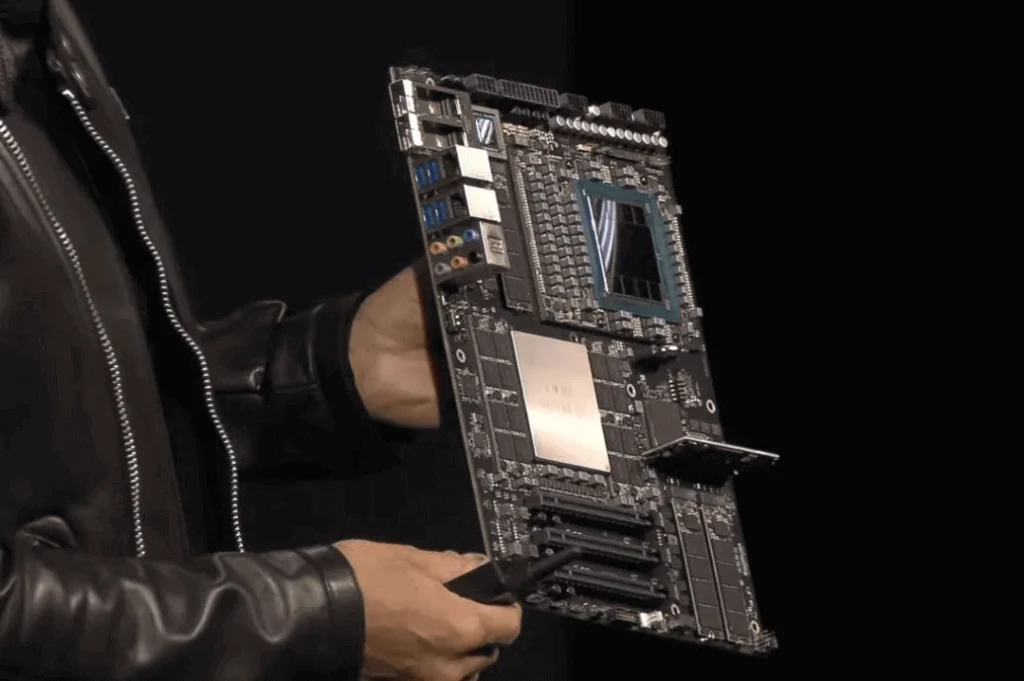

Nvidia’s data center offerings include modular systems such as DGX SuperPODs and custom AI racks designed to deliver exascale‑class performance. Key attributes include:

- Optimized cooling solutions

- Dense GPU interconnectivity

- Redundant power systems

Such infrastructures reduce bottlenecks in large‑scale distributed training and inference.

5.2 Integration with Cloud AI Services

Cloud service providers (CSPs) have integrated Nvidia’s next‑generation hardware into their accelerated compute offerings, delivering AI as a service (AIaaS) to enterprises. These services broaden access to cutting‑edge compute without requiring capital‑intensive on‑premises deployments.

5.3 Edge AI and Hybrid Deployments

Recognizing that latency, privacy, and connectivity constraints often dictate localized processing, Nvidia supports a hybrid compute paradigm where:

- Core AI training occurs in centralized data centers

- Inference and real‑time processing occur at the edge

Edge‑optimized hardware (such as Jetson family devices) complements the data center ecosystem, enabling use cases like autonomous vehicles, robotics, and smart infrastructure.

6. Real‑World Applications and Impact

6.1 Healthcare Innovations

Next‑generation AI hardware and models are accelerating healthcare breakthroughs, including:

- Analysis of medical images at sub‑millimeter resolution

- Predictive patient monitoring systems

- Drug discovery pipelines using AI‑driven simulations

These applications benefit from high‑throughput, high‑precision AI processing.

6.2 Autonomous Systems and Robotics

AI models trained on vast datasets within Nvidia’s accelerated environments power capabilities such as:

- Real‑time object detection and scene understanding

- Adaptive motion planning

- Human‑robot interaction with contextual awareness

Hardware scalability helps these systems operate reliably in complex real‑world conditions.

6.3 Scientific Discovery

AI‑driven simulation and modeling accelerate scientific research across physics, climate modeling, genomics, and materials science. For instance:

- Quantum simulations that were previously impractical

- Fast approximation of complex differential equations

- AI‑augmented experimental planning

These contributions underscore AI’s role as an indispensable scientific tool.

7. Comparing the Competitive Landscape

7.1 GPU vs. Alternative AI Accelerators

While Nvidia’s GPUs remain the dominant AI accelerator class, alternative architectures have emerged, including:

- TPUs (Tensor Processing Units)

- Custom ASICs

- FPGA‑based accelerators

Each offers trade‑offs in performance, cost, and flexibility. Nvidia’s strategic advantage stems from:

- Mature software ecosystem

- Broad industry adoption

- Ongoing architectural innovation

7.2 Collaborative Ecosystems and Partnerships

Nvidia collaborates with leading cloud providers, research institutions, and industry partners to:

- Validate real‑world performance

- Co‑optimize software and hardware

- Drive standards for interoperability

This ecosystem approach accelerates the adoption of cutting‑edge AI infrastructure.

8. Challenges and Considerations

8.1 Cost and Accessibility

High‑end AI hardware represents a significant investment. Strategies to mitigate cost barriers include:

- Shared cloud access

- AI research grants

- Open‑source model availability

8.2 Ethical and Responsible AI

Powerful AI models raise concerns regarding:

- Bias and fairness in model outputs

- Misuse for misinformation or harmful content

- Data privacy and security

Nvidia advocates for responsible AI development through transparent guidelines, toolkits for bias detection, and partnerships with ethics research groups.

8.3 Sustainability and Energy Consumption

Even with efficiency gains, large AI workloads consume substantial energy. Best practices for sustainability include:

- Dynamic workload scheduling

- Power‑aware resource allocation

- Renewable energy integration
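The first two practices above can be sketched as a simple admission policy: run jobs in priority order, but only while the estimated total draw stays under a facility power cap. Everything in this example is hypothetical, including the `Job` fields, the job names, and the power figures. A real scheduler would use measured telemetry and handle preemption and changing caps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    power_kw: float  # estimated draw while running (assumed known)
    priority: int    # lower number is admitted first


def schedule_under_cap(jobs, cap_kw):
    """Greedily admit jobs by priority without exceeding the power cap."""
    running, deferred, used_kw = [], [], 0.0
    for job in sorted(jobs, key=lambda j: j.priority):
        if used_kw + job.power_kw <= cap_kw:
            running.append(job.name)
            used_kw += job.power_kw
        else:
            deferred.append(job.name)  # retry when the cap or load changes
    return running, deferred, used_kw


if __name__ == "__main__":
    queue = [
        Job("train-a", 40.0, 1),
        Job("train-b", 50.0, 2),
        Job("infer-c", 15.0, 3),
    ]
    running, deferred, used = schedule_under_cap(queue, cap_kw=60.0)
    print("running: ", running)
    print("deferred:", deferred)
    print("power used (kW):", used)
```

Re‑running the same function with a higher cap, for instance when more renewable supply is available, admits the deferred jobs, which is one simple way the three practices in the list can work together.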

9. Future Directions

Looking ahead, AI hardware and model innovation will likely focus on:

- Ultra‑high‑bandwidth memory technologies

- Optical and quantum‑inspired computing

- AI hardware that self‑optimizes based on workload

- Federated learning across distributed devices

- Adaptive models that evolve from continuous learning

Nvidia’s roadmap, in concert with industry trends, points toward increasingly capable, efficient, and autonomous AI systems that integrate deeply into technological and societal frameworks.

Conclusion

Nvidia’s next‑generation AI hardware and models mark a significant step in the evolution of artificial intelligence infrastructure. By improving performance, efficiency, and scalability, they make more demanding computational tasks tractable and allow AI systems to be deployed across cloud, enterprise, and edge environments.

From supporting breakthroughs in scientific research to driving practical applications in healthcare, robotics, and autonomous systems, the impact of these advancements is likely to be broad. As organizations and developers adopt these technologies, the pace of AI discovery and deployment is likely to accelerate across industries.